As the use of web-based services continues to grow, it’s increasingly important to ensure that your application can handle large influxes of requests from users. Rate limiting is an essential tool that helps you regulate the number of requests that your application will process within a specified time frame. By using rate limiting, you can prevent your application from becoming overwhelmed and ensure that your service remains available to all users.

In this article, we’ll explore the fundamentals of rate limiting, different types of rate limiting algorithms, and several techniques and libraries for implementing rate limiting in Go applications. By the end of this tutorial, you’ll have a clear understanding of how and why to rate limit your Go applications.

To follow along with this article, you’ll need a working Go installation and basic familiarity with Go and its net/http package.

What is rate limiting?

Essentially, rate limiting is the process of controlling how many requests your application users can make within a specified time frame. There are a few reasons you might want to do this, for example:

- Ensuring that your application will function regardless of how much incoming traffic it receives

- Placing usage limits on some users, like implementing a capped free tier or a Fair Use policy

- Protecting your application from DoS (Denial of Service) and other cyber attacks

For these reasons, rate limiting is something all web applications have to integrate sooner or later. But how does it work? It depends. There are several algorithms that developers can use when rate limiting their applications.

Token bucket algorithm

In the token bucket algorithm, tokens are added to a bucket (some form of storage) at a fixed rate. Each request the application processes consumes a token from the bucket. The bucket has a fixed size, so tokens can’t pile up indefinitely. When the bucket runs out of tokens, new requests are rejected until more tokens are added.
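As a rough illustration of the description above, here is a minimal token bucket sketch. The `tokenBucket` type, its field names, and the `simulateTokenBucket` helper are all hypothetical names invented for this example; the sketch is not safe for concurrent use and is not how any particular library implements the algorithm. Timestamps are passed in explicitly so the behavior is easy to follow.

```go
package main

import (
	"fmt"
	"time"
)

// tokenBucket is a hypothetical, single-goroutine illustration of the
// algorithm. It is not safe for concurrent use.
type tokenBucket struct {
	capacity   float64   // maximum number of tokens the bucket can hold
	tokens     float64   // tokens currently available
	refillRate float64   // tokens added per second
	last       time.Time // when the bucket was last topped up
}

// newTokenBucket returns a bucket that starts full.
func newTokenBucket(capacity, refillRate float64, now time.Time) *tokenBucket {
	return &tokenBucket{capacity: capacity, tokens: capacity, refillRate: refillRate, last: now}
}

// allow tops up the bucket for the time elapsed since the last call, then
// spends one token if one is available.
func (b *tokenBucket) allow(now time.Time) bool {
	elapsed := now.Sub(b.last).Seconds()
	b.last = now
	b.tokens += elapsed * b.refillRate
	if b.tokens > b.capacity {
		b.tokens = b.capacity // the bucket has a fixed size
	}
	if b.tokens < 1 {
		return false // no tokens left: reject the request
	}
	b.tokens--
	return true
}

// simulateTokenBucket sends five requests at the same instant and a sixth
// one second later, against a bucket of capacity 4 refilled at 2 tokens/sec.
func simulateTokenBucket() []bool {
	start := time.Unix(0, 0)
	b := newTokenBucket(4, 2, start)
	var results []bool
	for i := 0; i < 5; i++ {
		results = append(results, b.allow(start))
	}
	results = append(results, b.allow(start.Add(time.Second)))
	return results
}

func main() {
	fmt.Println(simulateTokenBucket()) // prints [true true true true false true]
}
```

The fifth request is rejected because the burst of four has emptied the bucket; one second later, two tokens have been added back, so the sixth request succeeds.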

Leaky bucket algorithm

In the leaky bucket algorithm technique, requests are added to a bucket as they arrive and removed from the bucket for processing at a fixed rate. If the bucket fills up, additional requests are either rejected or delayed.
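The following sketch models the leaky bucket as a simple counter of queued requests that drains at a fixed interval. It only tracks admission decisions and does not actually process anything; the `leakyBucket` type and `simulateLeakyBucket` helper are hypothetical names for this example, and a real implementation would typically use a queue plus a worker that pulls requests at the drain rate.

```go
package main

import (
	"fmt"
	"time"
)

// leakyBucket is a hypothetical model that counts how many requests are
// waiting in the bucket. It is not safe for concurrent use.
type leakyBucket struct {
	capacity int           // maximum number of queued requests
	queued   int           // requests currently waiting in the bucket
	interval time.Duration // time between two requests leaving the bucket
	lastLeak time.Time     // last moment at which a request could leave
}

// add drains the requests that would have been processed since the last
// leak, then queues the new request if there is room.
func (b *leakyBucket) add(now time.Time) bool {
	leaked := int(now.Sub(b.lastLeak) / b.interval)
	if leaked > 0 {
		// Advance in whole intervals so the drain rate stays fixed.
		b.lastLeak = b.lastLeak.Add(time.Duration(leaked) * b.interval)
		if leaked > b.queued {
			leaked = b.queued
		}
		b.queued -= leaked
	}
	if b.queued >= b.capacity {
		return false // bucket is full: reject (or delay) the request
	}
	b.queued++
	return true
}

// simulateLeakyBucket sends four requests at once and a fifth one second
// later, against a bucket of capacity 3 draining one request every 500ms.
func simulateLeakyBucket() []bool {
	start := time.Unix(0, 0)
	b := &leakyBucket{capacity: 3, interval: 500 * time.Millisecond, lastLeak: start}
	var results []bool
	for i := 0; i < 4; i++ {
		results = append(results, b.add(start))
	}
	results = append(results, b.add(start.Add(time.Second)))
	return results
}

func main() {
	fmt.Println(simulateLeakyBucket()) // prints [true true true false true]
}
```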

Fixed window algorithm

The fixed window algorithm technique tracks the number of requests made in a fixed time window, for example, every five minutes. If the number of requests in a window exceeds a predetermined limit, other requests are either rejected or delayed until the beginning of the next window.
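A fixed window counter can be sketched in a few lines. In the example below, the `fixedWindow` type and the `simulateFixedWindow` helper are hypothetical names, and the sketch assumes windows aligned to the clock (via `time.Time.Truncate`) and rejection rather than delay of excess requests.

```go
package main

import (
	"fmt"
	"time"
)

// fixedWindow is a hypothetical counter for a single client. It is not
// safe for concurrent use.
type fixedWindow struct {
	limit       int           // maximum requests per window
	window      time.Duration // length of each window
	windowStart time.Time     // start of the window being counted
	count       int           // requests seen in the current window
}

// allow resets the counter when a new window begins, then admits the
// request if the limit has not been reached.
func (f *fixedWindow) allow(now time.Time) bool {
	// Truncate rounds the timestamp down to a multiple of the window size.
	start := now.Truncate(f.window)
	if !start.Equal(f.windowStart) {
		f.windowStart = start
		f.count = 0
	}
	if f.count >= f.limit {
		return false
	}
	f.count++
	return true
}

// simulateFixedWindow sends four requests just before a five-minute
// boundary and one request exactly on it, with a limit of three.
func simulateFixedWindow() []bool {
	f := &fixedWindow{limit: 3, window: 5 * time.Minute}
	base := time.Date(2024, 1, 1, 12, 4, 58, 0, time.UTC)
	offsets := []time.Duration{0, 0, time.Second, time.Second, 2 * time.Second}
	var results []bool
	for _, off := range offsets {
		results = append(results, f.allow(base.Add(off)))
	}
	return results
}

func main() {
	fmt.Println(simulateFixedWindow()) // prints [true true true false true]
}
```

The last request succeeds because it falls into a fresh window. This also hints at the algorithm's main weakness: a client can send a full quota at the end of one window and another full quota at the start of the next.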

Sliding window algorithm

Like the fixed window algorithm, the sliding window algorithm technique tracks the number of requests over a sliding window of time. Although the size of the window is fixed, the start of the window is determined by the time of the user’s first request instead of arbitrary time intervals. If the number of requests in a window exceeds a preset limit, subsequent requests are either dropped or delayed.
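One common way to realize a sliding window is to keep a log of recent request timestamps and count how many fall inside the window ending at the current request. The sketch below takes that approach, which is an assumption of this example rather than the only possible variant; `slidingWindow` and `simulateSlidingWindow` are hypothetical names.

```go
package main

import (
	"fmt"
	"time"
)

// slidingWindow is a hypothetical "sliding log" limiter for a single
// client. It is not safe for concurrent use.
type slidingWindow struct {
	limit  int           // maximum requests within any window
	window time.Duration // length of the window
	seen   []time.Time   // timestamps of accepted requests, oldest first
}

// allow discards timestamps that have slid out of the window, then admits
// the request if fewer than limit requests remain in the log.
func (s *slidingWindow) allow(now time.Time) bool {
	cutoff := now.Add(-s.window)
	i := 0
	for i < len(s.seen) && !s.seen[i].After(cutoff) {
		i++
	}
	s.seen = s.seen[i:]
	if len(s.seen) >= s.limit {
		return false
	}
	s.seen = append(s.seen, now)
	return true
}

// simulateSlidingWindow sends requests at 0s, 30s, 45s, and 61s with a
// limit of two requests per minute.
func simulateSlidingWindow() []bool {
	s := &slidingWindow{limit: 2, window: time.Minute}
	base := time.Unix(0, 0)
	offsets := []time.Duration{0, 30 * time.Second, 45 * time.Second, 61 * time.Second}
	var results []bool
	for _, off := range offsets {
		results = append(results, s.allow(base.Add(off)))
	}
	return results
}

func main() {
	fmt.Println(simulateSlidingWindow()) // prints [true true false true]
}
```

The request at 61 seconds is admitted because the first request is by then more than a minute old and no longer counts against the limit.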

Each algorithm has its own advantages and disadvantages, so choosing the appropriate technique depends on the needs of the application. With that said, the token bucket and leaky bucket variants are among the most widely used forms of rate limiting.

Implementing rate limiting in Go applications

In this section, we’ll go through some step-by-step, practical instructions on how to rate limit your Go application. First, we’ll create the web application that we’ll use to implement rate limiting. Start by creating a file called limit.go and add the following code to it:

package main

import (
	"encoding/json"
	"log"
	"net/http"
)

// Message is the JSON body returned by the API.
type Message struct {
	Status string `json:"status"`
	Body   string `json:"body"`
}

// endpointHandler writes a simple JSON acknowledgment.
func endpointHandler(writer http.ResponseWriter, request *http.Request) {
	writer.Header().Set("Content-Type", "application/json")
	writer.WriteHeader(http.StatusOK)
	message := Message{
		Status: "Successful",
		Body:   "Hi! You've reached the API. How may I help you?",
	}
	err := json.NewEncoder(writer).Encode(&message)
	if err != nil {
		log.Println("There was an error encoding the response", err)
		return
	}
}

func main() {
	http.HandleFunc("/ping", endpointHandler)
	err := http.ListenAndServe(":8080", nil)
	if err != nil {
		log.Println("There was an error listening on port :8080", err)
	}
}

The app you just built is a one-endpoint JSON API. The code above defines:

- A server

- A simple struct type for the responses of the API

- A /ping endpoint

- A handler that writes a simple acknowledgment response when the endpoint is hit

Now, we’ll rate limit the endpoint using middleware that implements the token bucket algorithm. Although we could write it entirely from scratch, creating a production-ready implementation of the token-bucket algorithm would be quite complex and time-consuming.

Instead, we’ll use the token bucket implementation provided by Go’s golang.org/x/time/rate package. Install it with the following terminal command:

go get golang.org/x/time/rate

Then, add the following path to your import statements:

"golang.org/x/time/rate"

Add the following function to limit.go:

func rateLimiter(next func(w http.ResponseWriter, r *http.Request)) http.Handler {
	// Allow an average of 2 requests per second with bursts of up to 4.
	limiter := rate.NewLimiter(2, 4)
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if !limiter.Allow() {
			message := Message{
				Status: "Request Failed",
				Body:   "The API is at capacity, try again later.",
			}
			// Set the content type before WriteHeader so the JSON body is labeled correctly.
			w.Header().Set("Content-Type", "application/json")
			w.WriteHeader(http.StatusTooManyRequests)
			json.NewEncoder(w).Encode(&message)
			return
		}
		next(w, r)
	})
}

The first thing to address in this function is the NewLimiter function from the rate package. According to the documentation, NewLimiter(r, b) returns a new limiter that allows events up to rate r and permits bursts of at most b tokens.

Basically, NewLimiter() returns a pointer to a Limiter that allows an average of r requests per second and bursts (runs of back-to-back requests) of up to b. With that understood, the rest follows the standard middleware pattern: the middleware creates the limiter and then returns a handler created from an anonymous function.

The anonymous function uses the limiter to check if this request is within the rate limits with limiter.Allow(). If it is, the anonymous function calls the next function in the chain. If the request is out of limits, the anonymous function returns an error message to the client.
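To see the middleware pattern in isolation, the sketch below replaces the rate limiter with a hypothetical allow function that permits only the first four calls, then drives the handler with the standard library's net/http/httptest package. The names `limitWith`, `firstN`, `statusCodes`, and `okHandler` are invented for this example and are not part of the article's code or of any library; the counter in `firstN` is not safe for concurrent use.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// limitWith is a hypothetical stand-in for the rate limiting middleware.
// It takes the allow decision as a function so that the pattern can be
// exercised without a real limiter.
func limitWith(allow func() bool, next http.HandlerFunc) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if !allow() {
			http.Error(w, "rate limit exceeded", http.StatusTooManyRequests)
			return
		}
		next(w, r)
	})
}

// firstN returns an allow function that permits only the first n calls.
func firstN(n int) func() bool {
	calls := 0
	return func() bool {
		calls++
		return calls <= n
	}
}

// statusCodes sends count requests through the handler and records the
// status code of each response.
func statusCodes(h http.Handler, count int) []int {
	codes := make([]int, 0, count)
	for i := 0; i < count; i++ {
		rec := httptest.NewRecorder()
		req := httptest.NewRequest(http.MethodGet, "/ping", nil)
		h.ServeHTTP(rec, req)
		codes = append(codes, rec.Code)
	}
	return codes
}

// okHandler is a trivial next handler that always succeeds.
func okHandler(w http.ResponseWriter, r *http.Request) {
	w.WriteHeader(http.StatusOK)
}

func main() {
	h := limitWith(firstN(4), okHandler)
	fmt.Println(statusCodes(h, 6)) // prints [200 200 200 200 429 429]
}
```

Passing the decision in as a function is purely a convenience for this sketch; it keeps the example deterministic, whereas a time-based limiter would make the outcome depend on how quickly the requests are sent.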

Next, use the middleware by changing the line that registers your handler with the default servemux:

func main() {
	http.Handle("/ping", rateLimiter(endpointHandler))
	err := http.ListenAndServe(":8080", nil)
	if err != nil {
		log.Println("There was an error listening on port :8080", err)
	}
}

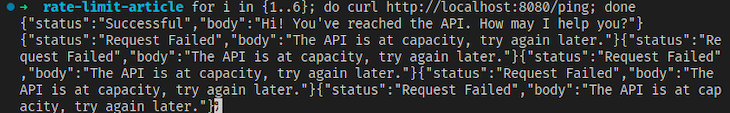

Now, run limit.go and fire off six requests to the ping endpoint in succession with cURL:

for i in {1..6}; do curl http://localhost:8080/ping; done

You should see that the burst of four requests was successful, but the last two exceeded the rate limit and were rejected by the application with a 429 Too Many Requests status and the JSON error message.

Per-client rate limiting

Although our current middleware works, it has one glaring flaw: the application is being rate limited as a whole. If one user makes a burst of four requests, all other users will be unable to access the API until the bucket refills. We can correct this by rate limiting each user individually, identifying them by some unique property. In this tutorial, we’ll use IP addresses, but you could easily substitute this with something else.

But how? We’ll follow an approach inspired by Alex Edwards. Inside the middleware, we’ll create a separate limiter for each IP address that makes a request, so each client has a separate rate limit.

We’ll also store the last time each client made a request, so once a client hasn’t made a request for a certain amount of time, we can delete their limiter to conserve the application’s memory. The last piece of the puzzle is to use a mutex to protect the stored client data from concurrent access.

Below is the code for the new middleware:

// Requires "net", "sync", and "time" in the import block.
func perClientRateLimiter(next func(writer http.ResponseWriter, request *http.Request)) http.Handler {
	type client struct {
		limiter  *rate.Limiter
		lastSeen time.Time
	}
	var (
		mu      sync.Mutex
		clients = make(map[string]*client)
	)
	// Once a minute, remove clients that have been idle for over three minutes.
	go func() {
		for {
			time.Sleep(time.Minute)
			// Lock the mutex to protect this section from race conditions.
			mu.Lock()
			for ip, c := range clients {
				if time.Since(c.lastSeen) > 3*time.Minute {
					delete(clients, ip)
				}
			}
			mu.Unlock()
		}
	}()
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// Extract the IP address from the request.
		ip, _, err := net.SplitHostPort(r.RemoteAddr)
		if err != nil {
			w.WriteHeader(http.StatusInternalServerError)
			return
		}
		// Lock the mutex to protect this section from race conditions.
		mu.Lock()
		if _, found := clients[ip]; !found {
			clients[ip] = &client{limiter: rate.NewLimiter(2, 4)}
		}
		clients[ip].lastSeen = time.Now()
		if !clients[ip].limiter.Allow() {
			mu.Unlock()
			message := Message{
				Status: "Request Failed",
				Body:   "The API is at capacity, try again later.",
			}
			// Set the content type before WriteHeader so the JSON body is labeled correctly.
			w.Header().Set("Content-Type", "application/json")
			w.WriteHeader(http.StatusTooManyRequests)
			json.NewEncoder(w).Encode(&message)
			return
		}
		mu.Unlock()
		next(w, r)
	})
}

This is a lot of code, but you can break it down into two rough sections: the setup work in the body of the middleware, and the anonymous function that does the rate limiting. Let’s look at the setup section first. This part of the code does a few things:

- Defines a struct type called client to hold a limiter and the lastSeen time for each client

- Creates a mutex and a map from strings to pointers to client structs called clients

- Creates a goroutine that runs once a minute and removes from the clients map any client struct whose lastSeen time is more than three minutes in the past

The next part is the anonymous function. The function:

- Extracts the IP address from the incoming request

- Checks if there’s a limiter for the current IP address in the map and adds one if there isn’t

- Updates the lastSeen time for the IP address

- Uses the limiter specific to the IP address to rate limit the request in the same way as the previous rate limiter

With that, you’ve implemented middleware that will rate limit each client separately! The last step is simply to comment out rateLimiter and replace it with perClientRateLimiter in your main function. If you repeat the cURL test from earlier with a couple of different devices, you should see each being limited separately.
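The per-client middleware identifies clients by IP address, but as mentioned earlier, another property could be used instead. As a rough sketch of that idea, the function below derives the map key from an API key header when one is present and falls back to the IP address otherwise. The `X-API-Key` header name and the `clientKey` and `keyFor` functions are assumptions made for this example, not part of the article's code.

```go
package main

import (
	"fmt"
	"net"
	"net/http"
	"net/http/httptest"
)

// clientKey returns the identifier used to look up a client's limiter.
// X-API-Key is a hypothetical header name chosen for illustration.
func clientKey(r *http.Request) string {
	if key := r.Header.Get("X-API-Key"); key != "" {
		return "key:" + key
	}
	ip, _, err := net.SplitHostPort(r.RemoteAddr)
	if err != nil {
		// RemoteAddr had no port; fall back to the raw value.
		return "ip:" + r.RemoteAddr
	}
	return "ip:" + ip
}

// keyFor builds a test request with the given remote address and optional
// API key, then returns the key that clientKey derives from it.
func keyFor(remoteAddr, apiKey string) string {
	req := httptest.NewRequest(http.MethodGet, "/ping", nil)
	req.RemoteAddr = remoteAddr
	if apiKey != "" {
		req.Header.Set("X-API-Key", apiKey)
	}
	return clientKey(req)
}

func main() {
	fmt.Println(keyFor("203.0.113.7:54321", ""))       // prints ip:203.0.113.7
	fmt.Println(keyFor("203.0.113.7:54321", "abc123")) // prints key:abc123
}
```

Prefixing the key with its kind keeps an API key from colliding with an IP address in the same map. Note that when the application sits behind a reverse proxy, r.RemoteAddr is the proxy's address, so an IP-based key would need to come from a header that the proxy is trusted to set.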

Rate limiting with Tollbooth

The approach you just learned is lightweight, functional, and great all around. But, depending on your use case, it might not have enough functionality for you, and you might not have the time or willingness to expand it. That brings us to the last rate limiting approach: using Tollbooth.

Tollbooth is a Go rate limiting package built and maintained by Didip Kerabat. It uses the token bucket algorithm as well as x/time/rate under the hood, has a clean and simple API, and offers many features, including rate limiting by:

- Request’s remote IP

- Path

- HTTP request methods

- Custom request headers

- Basic auth usernames

Using Tollbooth is simple. Start by installing it with the terminal command below:

go get github.com/didip/tollbooth/v7

Then, add the following path to your import statements:

"github.com/didip/tollbooth/v7"

Comment out perClientRateLimiter and replace your main function with the following code:

func main() {
	message := Message{
		Status: "Request Failed",
		Body:   "The API is at capacity, try again later.",
	}
	jsonMessage, _ := json.Marshal(message)
	tlbthLimiter := tollbooth.NewLimiter(1, nil)
	tlbthLimiter.SetMessageContentType("application/json")
	tlbthLimiter.SetMessage(string(jsonMessage))
	http.Handle("/ping", tollbooth.LimitFuncHandler(tlbthLimiter, endpointHandler))
	err := http.ListenAndServe(":8080", nil)
	if err != nil {
		log.Println("There was an error listening on port :8080", err)
	}
}

And that’s it! You’ve rate limited your endpoint at a rate of one request per second. Your new main function creates a one request per second limiter with tollbooth.NewLimiter, specifies a custom JSON rejection message, and then registers the limiter and handler for the /ping endpoint.

Performing the cURL test again should now show requests beyond the one-per-second limit being rejected with the custom JSON message defined above.

For examples of how to use the rest of Tollbooth’s features, check out its documentation.

Libraries that implement other algorithms

Depending on your use case, the token bucket algorithm might not be suitable for your needs. Below is a collection of Go libraries that use other algorithms for you to explore:

Finally, the limiters library provides implementations of all four algorithms.

Conclusion

Rate limiting is a fundamental tool for managing traffic and preventing the overloading of web-based services. By understanding how to implement rate limiting in your application, you can ensure that your service remains available and performs well, even when it experiences high volumes of requests. In this article, we explored rate limiting an example Go application using the x/time/rate package and Tollbooth, a great open source package built on top of it. But, if you don’t want to use the token bucket algorithm, you can use one of the listed alternatives.

I hope you enjoyed this article, and happy coding!

The post Rate limiting your Go application appeared first on LogRocket Blog.
