Join top executives in San Francisco on July 11-12 to hear how leaders are integrating and optimizing AI investments for success.

A lot has been written about the dangers of generative AI in recent months, and yet everything I’ve seen boils down to three simple arguments, none of which reflects the biggest risk I see headed our way. Before I get into this hidden danger of generative AI, it will be helpful to summarize the common warnings that have been circulating:

- Risk to jobs: Generative AI can now produce human-level work products ranging from artwork and essays to scientific reports. This will greatly impact the job market, but I believe it is a manageable risk as job definitions adapt to the power of AI. It will be painful for a period, but not unlike how previous generations adapted to other labor-saving technologies.

- Risk of fake content: Generative AI can now create human-quality artifacts at scale, including fake and misleading articles, essays, papers and video. Misinformation is not a new problem, but generative AI will allow it to be mass-produced at levels never before seen. This is a major risk, but manageable. That’s because fake content can be made identifiable by either (a) mandating watermarking technologies that identify AI content upon creation, or (b) deploying AI-based countermeasures that are trained to identify AI content after the fact.

- Risk of sentient machines: Many researchers worry that AI systems will get scaled up to a level where they develop a “will of their own” and will take actions that conflict with human interests, or even threaten human existence. I believe this is a genuine long-term risk. In fact, I wrote a “picture book for adults” entitled Arrival Mind a few years ago that explores this danger in simple terms. Still, I do not believe that current AI systems will spontaneously become sentient without major structural improvements to the technology. So, while this is a real danger for the industry to focus on, it’s not the most urgent risk that I see before us.

So, what concerns me most about the rise of generative AI?

From my perspective, where most safety experts and policymakers go wrong is that they view generative AI primarily as a tool for creating traditional content at scale. While the technology is quite skilled at cranking out articles, images and videos, the more important issue is that generative AI will unleash an entirely new form of media that is highly personalized, fully interactive and potentially far more manipulative than any form of targeted content we have faced to date.

Welcome to the age of interactive generative media

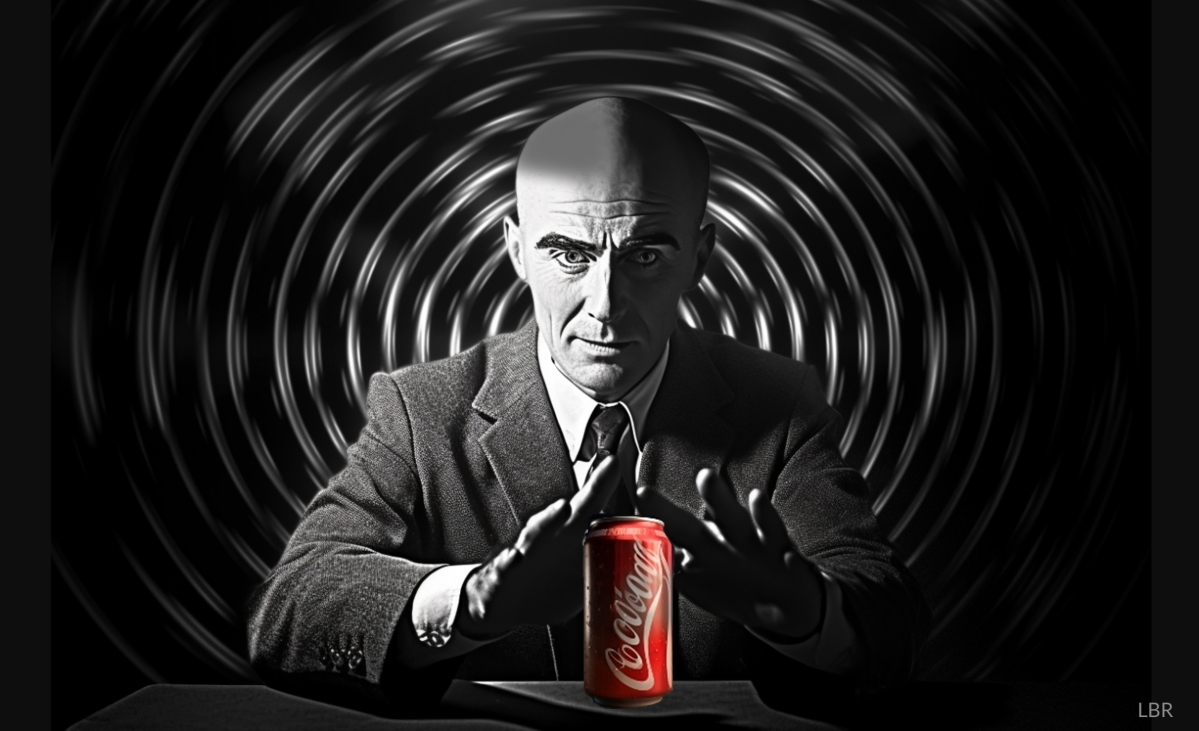

The most dangerous feature of generative AI is not that it can crank out fake articles and videos at scale, but that it can produce interactive and adaptive content that is customized for individual users to maximize persuasive impact. In this context, interactive generative media can be defined as targeted promotional material that is created or modified in real time to maximize influence objectives based on personal data about the receiving user.

This will transform “targeted influence campaigns” from buckshot aimed at broad demographic groups to heat-seeking missiles that can zero in on individual persons for optimal effect. And as described below, this new form of media is likely to come in two powerful flavors, “targeted generative advertising” and “targeted conversational influence.”

Targeted generative advertising is the use of images, videos and other forms of informational content that look and feel like traditional ads but are personalized in real time for individual users. These ads will be created on the fly by generative AI systems based on influence objectives provided by third-party sponsors in combination with personal data accessed for the specific user being targeted. The personal data may include the user’s age, gender and education level, combined with their interests, values, aesthetic sensibilities, purchasing tendencies, political affiliations and cultural biases.

In response to the influence objectives and targeting data, the generative AI will customize the layout, feature images and promotional messaging to maximize effectiveness on that user. Everything down to the colors, fonts and punctuation could be personalized, along with the age, race and clothing styles of any people shown in the imagery. Will you see video clips of urban scenes or rural scenes? Will they be set in the fall or spring? Will you see images of sports cars or family vans? Every detail could be customized in real time by generative AI to maximize the subtle impact on you personally.

And because tech platforms can track user engagement, the system will learn which tactics work best on you over time, discovering the hair colors and facial expressions that best draw your attention.

If this seems like science fiction, consider this: Both Meta and Google have recently announced plans to use generative AI in the creation of online ads. If these tactics produce more clicks for sponsors, they will become standard practice and an arms race will follow, with all major platforms competing to use generative AI to customize promotional content in the most effective ways possible.

This brings me to targeted conversational influence, a generative technique in which influence objectives are conveyed through interactive conversation rather than traditional documents or videos.

The conversations will occur through chatbots (like ChatGPT and Bard) or through voice-based systems powered by similar large language models (LLMs). Users will encounter these “conversational agents” many times throughout their day, as third-party developers will use APIs to integrate LLMs into their websites, apps and interactive digital assistants.

For example, you might access a website looking for the latest weather forecast, engaging conversationally with an AI agent to request the information. In the process, you could be targeted with conversational influence: subtle promotional messaging woven into the dialog.

As conversational computing becomes commonplace in our lives, the risk of conversational influence will greatly expand, as paying sponsors could inject messaging into the dialog that we may not even notice. And like targeted generative ads, the messaging goals requested by sponsors will be used in combination with personal data about the targeted user to optimize impact.

The data could include the user’s age, gender and education level combined with personal interests, hobbies, values, etc., thereby enabling real-time generative dialog that is designed to optimally appeal to that specific person.

Why use conversational influence?

If you’ve ever worked as a salesperson, you probably know that the best way to persuade a customer is not to hand them a brochure, but to engage them in face-to-face dialog so you can pitch them on the product, hear their reservations and adjust your arguments as needed. It’s a cyclic process of pitching and adjusting that can “talk them into” a purchase.

While this has been a purely human skill in the past, generative AI can now perform these steps, but with greater skill and deeper knowledge to draw upon.

And while a human salesperson has only one persona, these AI agents will be digital chameleons that can adopt any speaking style, from nerdy or folksy to suave or hip, and can pursue any sales tactic, from befriending the customer to exploiting their fear of missing out. And because these AI agents will be armed with personal data, they could mention the right musical artists or sports teams to ease you into friendly dialog.

In addition, tech platforms could document how well prior conversations worked to persuade you, learning what tactics are most effective on you personally. Do you respond to logical appeals or emotional arguments? Do you seek the biggest bargain or the highest quality? Are you swayed by time-pressure discounts or free add-ons? Platforms will quickly learn to pull all your strings.

Of course, the big threat to society is not the optimized ability to sell you a pair of pants. The real danger is that the same techniques will be used to drive propaganda and misinformation, talking you into false beliefs or extreme ideologies that you might otherwise reject. A conversational agent, for example, could be directed to convince you that a perfectly safe medication is a dangerous plot against society. And because AI agents will have access to an internet full of information, they could cherry-pick evidence in ways that would overwhelm even the most knowledgeable human.

This creates an asymmetric power balance, often called the AI manipulation problem, in which we humans are at an extreme disadvantage, conversing with artificial agents that are highly skilled at appealing to us, while we have no ability to “read” the true intentions of the entities we’re talking to.

Unless regulated, targeted generative ads and targeted conversational influence will be powerful forms of persuasion in which users are outmatched by an opaque digital chameleon that gives no insight into its thinking process but is armed with extensive data about our personal likes, wants and tendencies, and has access to unlimited information to fuel its arguments.

For these reasons, I urge regulators, policymakers and industry leaders to focus on generative AI as a new form of media that is interactive, adaptive, personalized and deployable at scale. Without meaningful protections, consumers could be exposed to predatory practices that range from subtle coercion to outright manipulation.

Louis Rosenberg, Ph.D., is an early pioneer in the fields of VR, AR and AI and the founder of Immersion Corporation (IMMR: Nasdaq), Microscribe 3D, Outland Research and Unanimous AI.

