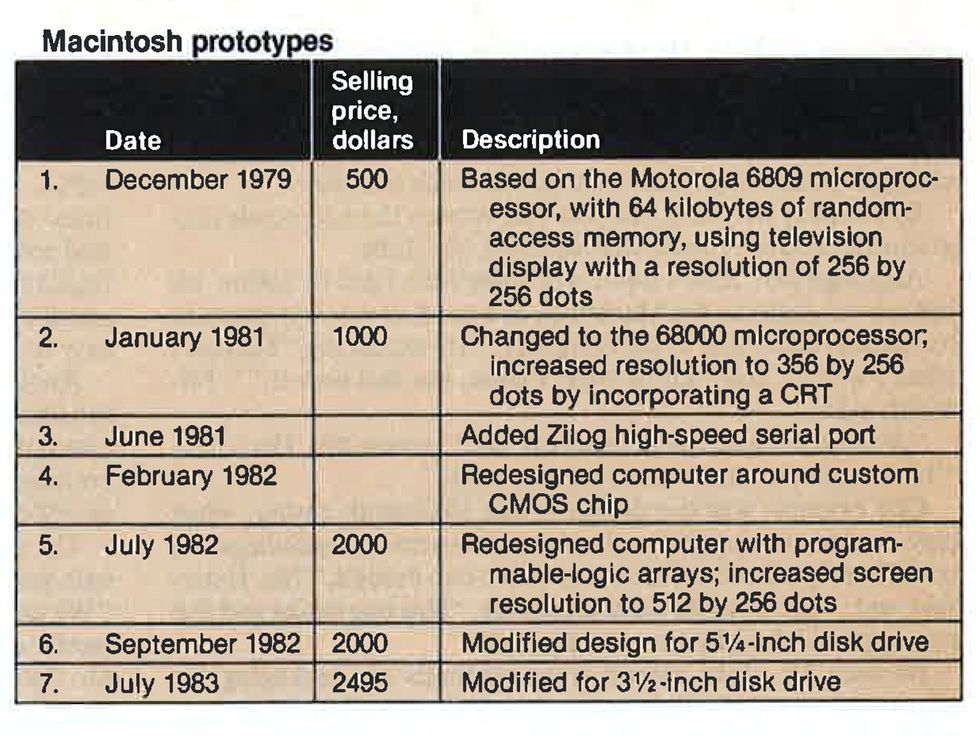

In 1979 the Macintosh personal computer existed only as the pet idea of Jef Raskin, a veteran of the Apple II team, who had proposed that Apple Computer Inc. make a low-cost “appliance”-type computer that would be as easy to use as a toaster. Mr. Raskin believed the computer he envisioned, which he called Macintosh, could sell for US $1000 if it was manufactured in high volume and used a powerful microprocessor executing tightly written software.

Mr. Raskin’s proposal did not impress anyone at Apple Computer enough to bring much money from the board of directors or much respect from Apple engineers. The company had more pressing concerns at the time: the major Lisa workstation project was getting under way, and there were problems with the reliability of the Apple III, the revamped version of the highly successful Apple II.

Although the odds seemed against it in 1979, the Macintosh, designed by a handful of inexperienced engineers and programmers, is now recognized as a technical milestone in personal computing. Essentially a slimmed-down version of the Lisa workstation with many of its software features, the Macintosh sold for $2495 at its introduction in early 1984; the Lisa initially sold for $10,000. Despite criticism of the Macintosh—that it lacks networking capabilities adequate for business applications and is awkward to use for some tasks—the computer is considered by Apple to be its most important weapon in the war with IBM for survival in the personal-computer business.

From the beginning, the Macintosh project was powered by the dedicated drive of two key players on the project team. For Burrell Smith, who designed the Macintosh digital hardware, the project represented an opportunity for a relative unknown to demonstrate outstanding technical talents. For Steven Jobs, the 29-year-old chairman of Apple and the Macintosh project’s director, it offered a chance to prove himself in the corporate world after a temporary setback: although he cofounded Apple Computer, the company had declined to let him manage the Lisa project. Mr. Jobs contributed relatively little to the technical design of the Macintosh, but he had a clear vision of the product from the beginning. He challenged the project team to design the best product possible and encouraged the team by shielding them from bureaucratic pressures within the company.

Burrell Smith and the Early Mac Design

Mr. Smith, who was a repairman in the Apple II maintenance department in 1979, had become hooked on microprocessors several years earlier during a visit to the electronics-industry area south of San Francisco known as Silicon Valley. He dropped out of liberal-arts studies at the Junior College of Albany, New York, to pursue the possibilities of microprocessors—there isn’t anything you can’t do with those things, he thought. Mr. Smith later became a repairman in Cupertino, Calif., where he spent much time studying the cryptic logic circuitry of the Apple II, designed by company cofounder Steven Wozniak.

Mr. Smith’s dexterity in the shop impressed Bill Atkinson, one of the Lisa designers, who introduced him to Mr. Raskin as “the man who’s going to design your Macintosh.” Mr. Raskin replied noncommittally, “We’ll see about that.”

However, Mr. Smith managed to learn enough about Mr. Raskin’s conception of the Macintosh to whip up a makeshift prototype using a Motorola 6809 microprocessor, a television monitor, and an Apple II. He showed it to Mr. Raskin, who was impressed enough to make him the second member of the Macintosh team.

But the fledgling Macintosh project was in trouble. The Apple board of directors wanted to cancel the project in September 1980 to concentrate on more important projects, but Mr. Raskin was able to win a three-month reprieve.

Meanwhile Steve Jobs, then vice president of Apple, was having trouble with his own credibility within the company. Though he had sought to manage the Lisa computer project, the other Apple executives saw him as too inexperienced and eccentric to entrust him with such a major undertaking, and he had no formal business education. After this rejection, “he didn’t like the lack of control he had,” noted one Apple executive. “He was looking for his niche.”

Mr. Jobs became interested in the Macintosh project, and, possibly because few in the company thought the project had a future, he was made its manager. Under his direction, the design team became as compact and efficient as the Macintosh was to be: a group of engineers working at a distance from all the meetings and paper-pushing of the corporate mainstream. In recruiting the other members of the Macintosh team, Mr. Jobs lured some from other companies with promises of potentially lucrative stock options.

With Mr. Jobs at the helm, the project gained some credibility among the board of directors—but not much. According to one team member, it was known in the company as “Steve’s folly.” But Mr. Jobs lobbied for a bigger budget for the project and got it. The Macintosh team grew to 20 by early 1981.

The decision on what form the Macintosh would take was left largely to the design group. At first the members had only the basic principles set forth by Mr. Raskin and Mr. Jobs to guide them, as well as the example set by the Lisa project. The new machine was to be easy to use and inexpensive to manufacture. Mr. Jobs wanted to commit enough money to build an automated factory that would produce about 300,000 computers a year. So one key challenge for the design group was to use inexpensive parts and to keep the parts count low.

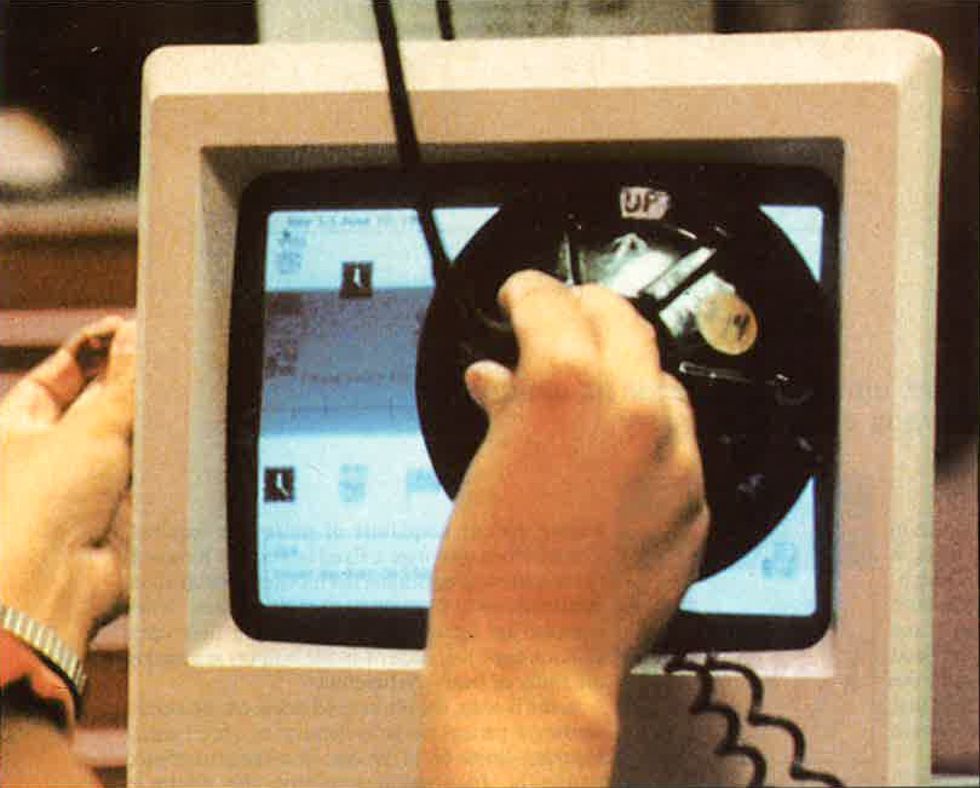

Making the computer easy to use required considerable software for the user-computer interface. The model was, of course, the Lisa workstation with its graphic “windows” to display simultaneously many different programs. “Icons,” or little pictures, were used instead of cryptic computer terms to represent a selection of programs on the screen; by moving a “mouse,” a box the size of a pack of cigarettes, the user manipulated a cursor on the screen. The Macintosh team redesigned the software of the Lisa from scratch to make it operate more efficiently, since the Macintosh was to have far less memory than the 1 million bytes of the Lisa. But the Macintosh software was also required to operate more quickly than the Lisa software, which had been criticized for being slow.

Defining the Mac as the Project Progressed

The lack of a precise definition for the Macintosh project was not a problem. Many of the designers preferred to define the computer as they went along. “Steve allowed us to crystallize the problem and the solution simultaneously,” recalled Mr. Smith. The method put strain on the design team, since they were continually evaluating design alternatives. “We were swamped in detail,” Mr. Smith said. But this way of working also led to a better product, the designers said, because they had the freedom to seize opportunities during the design stage to enhance the product.

Such freedom would not have been possible had the Macintosh project been structured in the conventional way at Apple, according to several of the designers. “No one tried to control us,” said one. “Some managers like to take control, and though that may be good for mundane engineers, it isn’t good if you are self-motivated.”

Central to the success of this method was the small, closely knit nature of the design group, with each member being responsible for a relatively large portion of the total design and free to consult other members of the team when considering alternatives. For example, Mr. Smith, who was well acquainted with the price of electronic components from his early work on reducing the cost of the Apple II, made many decisions about the economics of Macintosh hardware without time-consuming consultations with purchasing agents. Because communication among team members was good, the designers shared their areas of expertise by advising each other in the working stages, rather than waiting for a final evaluation from a group of manufacturing engineers. Housing all members of the design team in one small office made communicating easier. For example, it was simple for Mr. Smith to consult a purchasing agent about the price of parts if he needed to, because the purchasing agent worked in the same building.

Andy Hertzfeld, who transferred from the Apple II software group to design the Macintosh operating software, noted, “In lots of other projects at Apple, people argue about ideas. But sometimes bright people think a little differently. Somebody like Burrell Smith would design a computer on paper and people would say, ‘It’ll never work.’ So instead Burrell builds it lightning fast and has it working before the guy can say anything.”

The closeness of the Macintosh group enabled it to make design tradeoffs that would not have been possible in a large organization, the team members contended. The interplay between hardware and software was crucial to the success of the Macintosh design, which used a limited memory and few electronic parts to perform complex operations. Mr. Smith, who was in charge of the computer’s entire digital hardware design, and Mr. Hertzfeld became close friends and often collaborated. “When you have one person designing the whole computer,” Mr. Hertzfeld observed, “he knows that a little leftover gate in one part may be used in another part.”

To promote interaction among the designers, one of the first things that Mr. Jobs did in taking over the Macintosh project was to arrange special office space for the team. In contrast to Apple’s corporate headquarters, identified by the company logo on a sign on its well-trimmed lawn, the team’s new quarters, behind a Texaco service station, had no sign to identify them and no listing in the company telephone directory. The office, dubbed Texaco Towers, was an upstairs, low-rent, plasterboard-walled, “tacky-carpeted” place, “the kind you’d find at a small law outfit,” according to Chris Espinosa, a veteran of the original Apple design team and an early Macintosh draftee. It resembled a house more than an office, having a communal area much like a living room, with smaller rooms off to the side for more privacy in working or talking. The decor was part college dormitory, part electronics repair shop: art posters, beanbag chairs, coffee machines, stereo systems, and electronic equipment of all sorts scattered about.

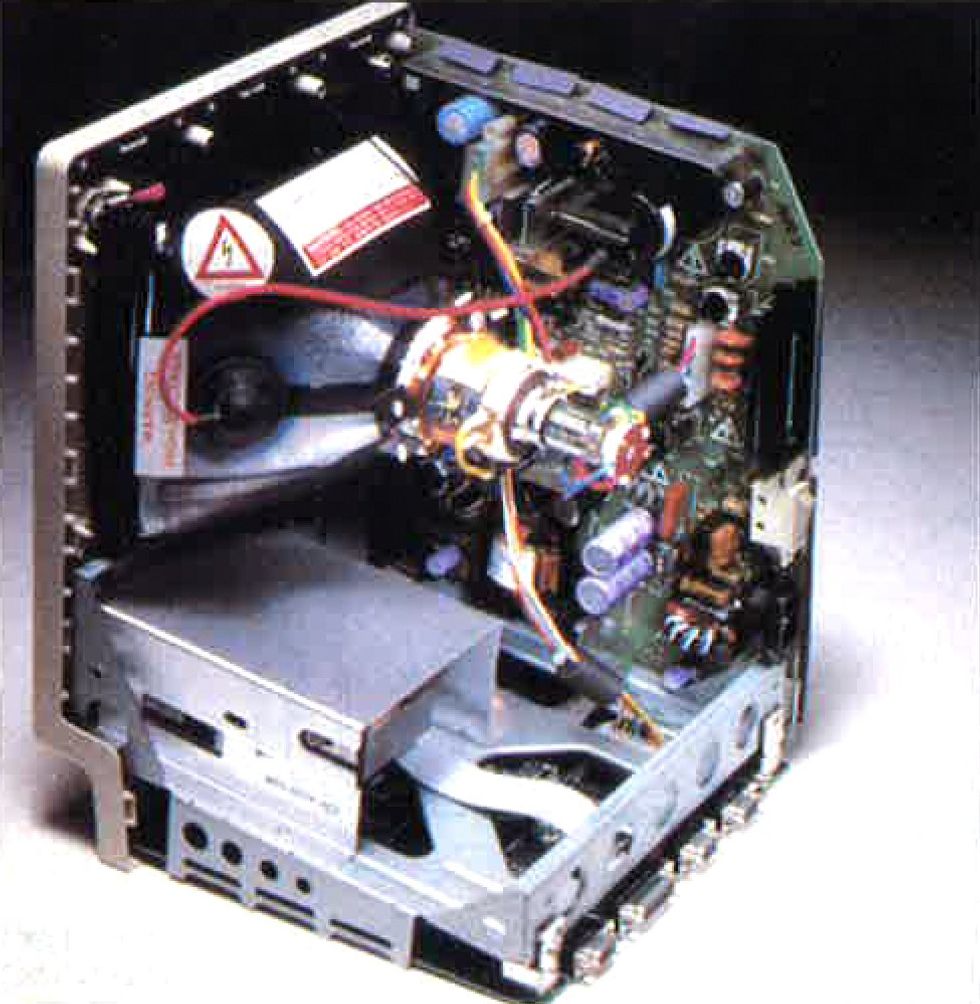

There were no set work hours and initially not even a schedule for the development of the Macintosh. Each week, if Mr. Jobs was in town (often he was not), he would hold a meeting at which the team members would report what they had done the previous week. One of the designers’ sidelines was to dissect the products of their competitors. “Whenever a competitor came out with a product, we would buy and dismantle it, and it would kick around the office,” recalled Mr. Espinosa.

In this way, they learned what they did not want their product to be. In their competitors’ products, Mr. Smith saw a propensity for using connectors and slots for inserting printed-circuit boards—a slot for the video circuitry, a slot for the keyboard circuitry, a slot for the disk drives, and memory slots. Behind each slot were buffers to allow signals to pass onto and off the printed-circuit board properly. The buffers meant delays in the computers’ operations, since several boards shared a backplane, and the huge capacitance required for multiple PC boards slowed the backplane. The number of parts required made the competitors’ computers hard to manufacture, costly, and less reliable. The Macintosh team resolved that their PC would have but two printed-circuit boards and no slots, buffers, or backplane.

A challenge in building the Macintosh was to offer sophisticated software using the fewest and least-expensive parts.

To squeeze the needed components onto the board, Mr. Smith planned the Macintosh to perform specific functions rather than operate as a flexible computer that could be tailored by programmers for a wide variety of applications. By rigidly defining the configuration of the Macintosh and the functions it would perform, he eliminated much circuitry. Instead of providing slots into which the user could insert printed-circuit boards with such hardware as memory or coprocessors, the designers decided to incorporate many of the basic functions of the computer in read-only memory, which is more reliable. The computer would be expanded not by slots, but through a high-speed serial port.

Writing the Mac’s Software

The software designers were faced in the beginning with often-unrealistic schedules. “We looked for any place where we could beg, borrow, or steal code,” Mr. Hertzfeld recalled. The obvious place for them to look was the Lisa workstation. The Macintosh team wanted to borrow some of the Lisa’s software for drawing graphics on the bit-mapped display. In 1981, Bill Atkinson was refining the Lisa graphics software, called Quickdraw, and began to work part-time implementing it for the Macintosh.

Quickdraw was a scheme for manipulating bit maps to enable applications programmers to construct images easily on the Macintosh bit-mapped display. The Quickdraw program allows the programmer to define and manipulate a region, a software representation of an arbitrarily shaped area of the screen. One such region is a rectangular window with rounded corners, used throughout the Macintosh software. Quickdraw also allows the programmer to keep images within defined boundaries, which makes the windows in the Macintosh software appear to hold data. The programmer can unite two regions, subtract one from the other, or intersect them.
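The three region operations and the clipping behavior described above can be illustrated with a small sketch. It assumes, purely for illustration, that a region is a set of pixel coordinates; the article does not describe how Quickdraw stored regions internally, and the function names here are hypothetical.

```python
# Illustrative model only: a region is a frozenset of (x, y) pixels.
# Quickdraw's real internal representation is not described in the article.

def rect_region(left, top, right, bottom):
    """Pixels with left <= x < right and top <= y < bottom."""
    return frozenset(
        (x, y) for x in range(left, right) for y in range(top, bottom)
    )

def union(a, b):
    """Unite two regions."""
    return a | b

def difference(a, b):
    """Subtract region b from region a."""
    return a - b

def intersection(a, b):
    """Keep only the area common to both regions."""
    return a & b

def clip(points, region):
    """Keep only the points that fall inside the region's boundary."""
    return [p for p in points if p in region]

# A window partly covered by a dialog box (hypothetical coordinates).
window = rect_region(0, 0, 8, 6)
dialog = rect_region(4, 2, 12, 10)

visible_window = difference(window, dialog)  # part of the window still showing
overlap = intersection(window, dialog)       # part hidden behind the dialog
drawn = clip([(1, 1), (5, 3), (20, 20)], visible_window)
```

In this model, drawing clipped to `visible_window` leaves pixels under the dialog untouched, which is the effect that makes a window appear to hold its contents.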

In Macintosh, the Quickdraw program was to be tightly written in assembly-level code and etched permanently in ROM. It would serve as a foundation for higher-level software to make use of graphics.

Quickdraw was “an amazing graphics package,” Mr. Hertzfeld noted, but it would have strained the capabilities of the 6809 microprocessor, the heart of the early Macintosh prototype. Motorola Corp. announced in late 1980 that the 68000 microprocessor was available, but that chip was new and unproven in the field, and at $200 apiece it was also expensive. Reasoning that the price of the chip would come down before Apple was ready to start mass-producing the Macintosh, the Macintosh designers decided to gamble on the Motorola chip.

Another early design question for the Macintosh was whether to use the Lisa operating system. Since the Lisa was still in the early stages of design, considerable development would have been required to tailor its operating system for the Macintosh. Even if the Lisa had been completed, rewriting its software in assembly code would have been required for the far smaller memory of the Macintosh. In addition, the Lisa was to have a multitasking operating system, using complex circuitry and software to run more than one computer program at the same time, which would have been too expensive for the Macintosh. Thus the decision was made to write a Macintosh operating system from scratch, working from the basic concepts of the Lisa. Simplifying the Macintosh operating system posed the delicate problem of restricting the computer’s memory capacity enough to keep it inexpensive but not so much as to make it inflexible.

The Macintosh would have no multitasking capability but would execute only one applications program at a time. Generally, a multitasking operating system tracks the progress of each of the programs it is running and then stores the entire state of each program—the values of its variables, the location of the program counter, and so on. This complex operation requires more memory and hardware than the Macintosh designers could afford. However, the illusion of multitasking was created by small programs built into the Macintosh system software. Since these small programs—such as one that creates the images of a calculator on the screen and does simple arithmetic—operate in areas of memory separate from applications, they can run simultaneously with applications programs.

Embedding Macintosh software in 64 kilobytes of read-only memory increased the reliability of the computer and simplified the hardware [A]. About one third of the ROM software is the operating system. One third is taken up by Quickdraw, a program for representing shapes and images for the bit-mapped display. The remaining third is devoted to the user interface toolbox, which handles the display of windows, text editing, menus, and the like. The user interface of the Macintosh includes pull-down menus, which appear only when the cursor is placed over the menu name and a button on the mouse is pressed. Above, a user examining the ‘file’ menu selects the open command, which causes the computer to load the file (indicated by darkened icon) from disk into internal memory. The Macintosh software was designed to make the toolbox routines optional for programmers; the applications program offers the choice of whether or not to handle an event [B].

Since the Macintosh used a memory-mapped scheme, the 68000 microprocessor required no memory management, simplifying both the hardware and the software. For example, the 68000 has two modes of operation: a user mode, which is restricted so that a programmer cannot inadvertently upset the memory-management scheme; and a supervisor mode, which allows unrestricted access to all of the 68000’s commands. Each mode uses its own stack of pointers to blocks of memory. The 68000 was rigged to run only in the supervisor mode, eliminating the need for the additional stack. Although seven levels of interrupts were available for the 68000, only three were used.

Another simplification was made in the Macintosh’s file structure, exploiting the small disk space with only one or two floppy disk drives. In the Lisa and most other operating systems, two indexes access a program on floppy disk, using up precious random-access memory and increasing the delay in fetching programs from a disk. The designers decided to use only one index for the Macintosh—a block map, located in RAM, to indicate the location of a program on a disk. Each block map represented one volume of disk space.

This scheme ran into unexpected difficulties and may be modified in future versions of the Macintosh, Mr. Hertzfeld said. Initially, the Macintosh was not intended for business users, but as the design progressed and it became apparent that the Macintosh would cost more than expected, Apple shifted its marketing plan to target business users. Many of them add hard disk drives to the Macintosh, making the block-map scheme unwieldy.

By January 1982, Mr. Hertzfeld had begun working on what was perhaps the computer’s most distinctive feature: software for the Macintosh that he called the user-interface toolbox.

The toolbox was envisioned as a set of software routines for constructing the windows, pull-down menus, scroll bars, icons, and other graphic objects in the Macintosh operating system. Since RAM space would be scarce on the Macintosh (it initially was to have only 64 kilobytes), the toolbox routines were to be a part of the Macintosh’s operating software; they would use the Quickdraw routines and operate in ROM.

It was important, however, not to handicap applications programmers (who could boost sales of the Macintosh by writing programs for it) by restricting them to only a few toolbox routines in ROM. So the toolbox code was designed to fetch definition functions (routines that use Quickdraw to create a graphic image such as a window) from either the system disk or an applications disk. In this way, an applications programmer could add definition functions for a program, which Apple could incorporate in later versions of the Macintosh by modifying the system disk. “We were nervous about putting [the toolbox] in ROM,” recalled Mr. Hertzfeld. “We knew that after the Macintosh was out, programmers would want to add to the toolbox routines.”
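A minimal sketch of this lookup follows. It assumes that each disk can be modeled as a table of named definition functions and that an applications disk is consulted before the system disk; the article does not state the search order, and every name below is hypothetical.

```python
# Hypothetical model: each "disk" maps an object kind to a definition
# function. The search order (application first) is an assumption.

def standard_window(title):
    """Stand-in for the stock window definition function."""
    return f"[window: {title}]"

def rounded_window(title):
    """Stand-in for a definition function supplied by an application."""
    return f"(window: {title})"

SYSTEM_DISK = {"window": standard_window}
APPLICATION_DISK = {"window": rounded_window}

def fetch_definition(kind, sources):
    """Return the first definition function found for `kind`."""
    for source in sources:
        if kind in source:
            return source[kind]
    raise KeyError(kind)

def draw(kind, title, sources):
    """Fixed toolbox code: look up the definition, then call it."""
    return fetch_definition(kind, sources)(title)

custom = draw("window", "Notes", [APPLICATION_DISK, SYSTEM_DISK])
stock = draw("window", "Notes", [{}, SYSTEM_DISK])
```

The point of the indirection is that `draw` itself never changes: new behavior arrives as data on a disk rather than as a change to the code fixed in ROM.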

Although the user could operate only one applications program at a time, he could transfer text or graphics from one applications program to another with a toolbox routine called scrapbook. Since the scrapbook and the rest of the toolbox routines were located in ROM, they could run along with applications programs, giving the illusion of multitasking. The user would cut text from one program into the scrapbook, close the program, open another, and paste the text from the scrapbook. Other routines in the toolbox, such as the calculator, could also operate simultaneously with applications programs.

Late in the design of the Macintosh software, the designers realized that, to market the Macintosh in non-English-speaking countries, an easy way of translating text in programs into foreign languages was needed. Thus computer code and data were separated in the software to allow translation without unraveling a complex computer program, by scanning the data portion of a program. No programmer would be needed for translation.
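The idea of separating code from data can be sketched as follows. The tables and the function are hypothetical and use a modern dictionary rather than anything the Macintosh software actually used; the sketch shows only that a translator edits the data and never touches the program logic.

```python
# Hypothetical string tables: the only part a translator would edit.
STRINGS_EN = {"open": "Open", "save": "Save", "quit": "Quit"}
STRINGS_FR = {"open": "Ouvrir", "save": "Enregistrer", "quit": "Quitter"}

def build_menu(strings):
    """Program logic: refers to text by key, never by literal wording."""
    order = ["open", "save", "quit"]
    return [strings[key] for key in order]

english_menu = build_menu(STRINGS_EN)
french_menu = build_menu(STRINGS_FR)
```

Because `build_menu` is identical for both languages, adding a language means supplying one more table.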

Placing an Early Bet on the 68000 Chip

The 68000, with a 16-bit data bus and 32-bit internal registers and a 7.83-megahertz clock, could grab data in relatively large chunks. Mr. Smith dispensed with separate controllers for the mouse, the disk drives, and other peripheral functions. “We were able to leverage off slave devices,” Mr. Smith explained, “and we had enough throughput to deal with those devices in a way that appeared concurrent to the user.”

When Mr. Smith suggested implementing the mouse without a separate controller, several members of the design team argued that if the main microprocessor was interrupted each time the mouse was moved, the movement of the cursor on the screen would always lag. Only when Mr. Smith got the prototype up and running were they convinced it would work.

Likewise, in the second prototype, the disk drives were controlled by the main microprocessor. “In other computers,” Mr. Smith noted, “the disk controller is a brick wall between the disk and the CPU, and you end up with a poor-performance, expensive disk that you can lose control of. It’s like buying a brand-new car complete with a chauffeur who insists on driving everywhere.”

The 68000 was assigned many duties of the disk controller and was linked with a disk-controller circuit built by Mr. Wozniak for the Apple II. “Instead of a wimpy little 8-bit microprocessor out there, we have this incredible 68000—it’s the world’s best disk controller,” Mr. Smith said.

Direct-memory-access circuitry was designed to allow the video screen to share RAM with the 68000. Thus the 68000 would have access to RAM at half speed during the live portion of the horizontal line of the video screen and at full speed during the horizontal and vertical retrace. [See diagram, below.]

The 68000 microprocessor, which has exclusive access to the read-only memory of the Macintosh, fetches commands from ROM at full speed, 7.83 megahertz. The 68000 shares the random-access memory with the video and sound circuitry, having access to RAM only part of the time [A]; it fetches instructions from RAM at an average speed of about 6 megahertz. The video and sound instructions are loaded directly into the video-shift register or the sound-counter, respectively. Much of the “glue” circuitry of the Macintosh is contained in eight programmable-array-logic chips. The Macintosh’s ability to play four independent voices was added relatively late in the design, when it was realized that most of the circuitry needed already existed in the video circuitry [B]. The four voices are added in software and the digital samples stored in memory. During the video retrace, sound data is fed into the sound buffer.
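The relationship between the 7.83-megahertz clock and the roughly 6-megahertz average RAM rate can be checked with a simple two-state model. The model is an assumption, not Apple's timing analysis: it supposes RAM access runs at exactly half speed for some fraction of the time (the live video portion) and at full speed otherwise.

```python
FULL_RATE_MHZ = 7.83  # clock rate quoted in the article

def average_rate(full_rate, live_fraction):
    """Time-weighted average of half-speed and full-speed access."""
    half_rate = full_rate / 2
    return live_fraction * half_rate + (1 - live_fraction) * full_rate

def implied_live_fraction(full_rate, average):
    """Invert average_rate: the live fraction that yields `average`."""
    return 2 * (1 - average / full_rate)

# Fraction of time at half speed implied by the article's ~6 MHz figure.
live = implied_live_fraction(FULL_RATE_MHZ, 6.0)
check = average_rate(FULL_RATE_MHZ, live)
```

Under this simplified model, an average of about 6 megahertz corresponds to the processor running at half speed a little under half the time.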

While building the next prototype, Mr. Smith saw several ways to save on digital circuitry and increase the execution speed of the Macintosh. The 68000 instruction set allowed Mr. Smith to embed subroutines in ROM. Since the 68000 has exclusive use of the address and data buses of the ROM, it has access to the ROM routines at up to the full clock speed. The ROM serves somewhat as a high-speed cache memory.

The next major revision in the original concept of the Macintosh was made in the computer’s display. Mr. Raskin had proposed a computer that could be hooked up to a standard television set. However, it became clear early on that the resolution of television display was too coarse for the Macintosh. After a bit of research, the designers found they could increase the display resolution from 256 by 256 dots to 384 by 256 dots by including a display with the computer. This added to the estimated price of the Macintosh, but the designers considered it a reasonable tradeoff.

To keep the parts count low, the two input/output ports of the Macintosh were to be serial. The decision to go with this was a serious one, since the future usefulness of the computer depended largely on its efficiency when hooked up to printers, local-area networks, and other peripherals. In the early stages of development, the Macintosh was not intended to be a business product, which would have made networking a high priority.

The key factor in the decision to use one high-speed serial port was the introduction in the spring of 1981 of the Zilog Corp.’s Z8530 serial-communications controller, a single chip to replace two less expensive conventional parts (“vanilla” chips) in the Macintosh. The risks in using the Zilog chip were that it had not been proven in the field and it was expensive, almost $9 apiece. In addition, Apple had a hard time convincing Zilog that it seriously intended to order the part in high volumes for the Macintosh.

“We had an image problem,” explained Mr. Espinosa. “We wore T-shirts and blue jeans with holes in the knees, and we had a maniacal conviction that we were right about the Macintosh, and that put some people off. Also, Apple hadn’t yet sold a million Apple IIs. How were we to convince them that we would sell a million Macs?”

In the end, Apple got a commitment from Zilog to supply the part, which Mr. Espinosa attributes to the negotiating talents of Mr. Jobs. The serial input/output ports “gave us essentially the same bandwidth that a memory-mapped parallel port would,” Mr. Smith said. Peripherals were connected to serial ports in a daisy-chain configuration with the Apple bus network.

Designing the Mac’s Factory Without the Product

In the fall of 1981, as Mr. Smith worked on the fourth Macintosh prototype, the design for the Macintosh factory was getting under way. Mr. Jobs hired Debi Coleman, who was then working as financial manager at Hewlett-Packard Co. in Cupertino, Calif., to handle the finances of the Macintosh project. A graduate of Stanford University with a master’s degree in business administration, Ms. Coleman was a member of a task force at HP that was studying factories, quality management, and inventory management. This was good training for Apple, for Mr. Jobs was intent on using such concepts to build a highly automated manufacturing plant for the Macintosh in the United States.

Briefly he considered building the plant in Texas, but since the designers were to work closely with the manufacturing team in the later stages of the Macintosh design, he decided to locate the plant at Fremont, Calif., less than a half-hour’s drive from Apple’s Cupertino headquarters.

Mr. Jobs and other members of the Macintosh team made frequent tours of automated plants in various industries, particularly in Japan. At long meetings held after the visits, the manufacturing group discussed whether to borrow certain methods they had observed.

The Macintosh factory borrowed assembly ideas from other computer plants and other industries. A method of testing the brightness of cathode-ray tubes was borrowed from television manufacturers.

The Macintosh factory design was based on two major concepts. The first was “just-in-time” inventory, calling for vendors to deliver parts for the Macintosh frequently, in small lots, to avoid excessive handling of components at the factory and reduce damage and storage costs. The second concept was zero-defect parts, with any defect on the manufacturing line immediately traced to its source and rectified to prevent recurrence of the error.

The factory, which was to churn out about a half million Macintosh computers a year (the number kept increasing), was designed to be built in three stages: first, equipped with stations for workers to insert some Macintosh components, delivered to them by simple robots; second, with robots to insert components instead of workers; and third, many years in the future, with “integrated” automation, requiring virtually no human operators. In building the factory, “Steve was willing to chuck all the traditional ideas about manufacturing and the relationship between design and manufacturing,” Ms. Coleman noted. “He was willing to spend whatever it cost to experiment in this factory. We planned to have a major revision every two years.”

By late 1982, before Mr. Smith had designed the final Macintosh prototype, the designs of most of the factory’s major subassemblies were frozen, and the assembly stations could be designed. About 85 percent of the components on the digital-logic printed-circuit board were to be inserted automatically, and the remaining 15 percent were to be surface-mounted devices inserted manually at first and by robots in the second stage of the factory. The production lines for automatic insertion were laid out to be flexible; the number of stations was not defined until trial runs were made. The materials-delivery system, designed with the help of engineers recruited from Texas Instruments in Dallas, Texas, divided small and large parts between receiving doors at the materials distribution center. The finished Macintoshes coming down the conveyor belt were to be wrapped in plastic and stuffed into boxes using equipment adapted from machines used in the wine industry for packaging bottles.

Most of the discrete components in the Macintosh are inserted automatically into the printed-circuit boards.

As factory construction progressed, pressure built on the Macintosh design team to deliver a final prototype. The designers had been working long hours but with no deadline set for the computer’s introduction. That changed in the middle of 1981, after Mr. Jobs imposed a tough and sometimes unrealistic schedule, reminding the team repeatedly that “real artists ship” a finished product. In late 1981, when IBM announced its personal computer, the Macintosh marketing staff began to refer to a “window of opportunity” that made it urgent to get the Macintosh to customers.

“We had been saying, ‘We’re going to finish in six months’ for two years,” Mr. Hertzfeld recalled.

The new urgency led to a series of design problems that seemed to threaten the Macintosh dream.

The Mac Team Faces Impossible Deadlines

The computer’s circuit density was one bottleneck. Mr. Smith had trouble paring enough circuitry off his first two prototypes to squeeze them onto one logic board. In addition, he needed faster circuitry for the Macintosh display. The horizontal resolution was only 384 dots—not enough room for the 80 characters of text needed for the Macintosh to compete as a word processor. One suggested solution was to use the word-processing software to allow an 80-character line to be seen by horizontal scrolling. However, most standard computer displays were capable of holding 80 characters, and the portable computers with less capability were very inconvenient to use.
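The arithmetic behind the 80-column problem can be sketched in a few lines. The 384-dot figure and the 80-character target come from the account above, and 512 dots is the width the team eventually reached. The 6-dot character cell is purely an illustrative assumption, not a figure from the article.

```python
def columns_that_fit(horizontal_dots: int, cell_width_dots: int) -> int:
    """Number of fixed-width character cells that fit on one scan line."""
    return horizontal_dots // cell_width_dots

# Assumed character cell width in dots (hypothetical, for illustration only).
CELL_WIDTH = 6

for dots in (384, 512):
    cols = columns_that_fit(dots, CELL_WIDTH)
    print(f"{dots} dots: {cols} columns, 80-column line fits: {cols >= 80}")
```

Under that assumption a 384-dot line falls well short of 80 columns, while a 512-dot line clears it.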

Another problem with the Macintosh display was its limited dot density. Although the analog circuitry, which was being designed by Apple engineer George Crow, accommodated 512 dots on the horizontal axis, Mr. Smith’s digital circuitry—which consisted of bipolar logic arrays—did not operate fast enough to generate the dots. Faster bipolar circuitry was considered but rejected because of its high-power dissipation and its cost. Mr. Smith could think of but one alternative: combine the video and other miscellaneous circuitry on a single custom n-channel MOS chip.

Mr. Smith began designing such a chip in February 1982. During the next six months the size of the hypothetical chip kept growing. Mr. Jobs set a shipping target of May 1983 for the Macintosh but, with a backlog of other design problems, Burrell Smith still had not finished designing the custom chip, which was named after him: the IBM (Integrated Burrell Machine) chip.

Meanwhile, the Macintosh offices were moved from Texaco Towers to more spacious quarters at the Apple headquarters, since the Macintosh staff had swelled to about 40. One of the new employees was Robert Belleville, whose previous employer was the Xerox Palo Alto Research Center. At Xerox he had designed the hardware for the Star workstation, which, with its windows, icons, and mouse, might be considered an early prototype of the Macintosh. When Mr. Jobs offered him a spot on the Macintosh team, Mr. Belleville was impatiently waiting for authorization from Xerox to proceed on a project he had proposed that was similar to the Macintosh: a low-cost version of the Star.

As the new head of Macintosh engineering, Mr. Belleville faced the task of directing Mr. Smith, who was proceeding on what looked more and more like a dead-end course. Despite the looming deadlines, Mr. Belleville tried a soft-sell approach.

“I asked Burrell if he really needed the custom chip,” Mr. Belleville recalled. “He said yes. I told him to think about trying something else.”

After thinking about the problem for three months, Mr. Smith concluded in July 1982 that “the difference in size between this chip and the state of Rhode Island is not very great.” He then set out to design the circuitry with higher-speed programmable-array logic—as he had started to do six months earlier. He had assumed that higher resolution in the horizontal video required a faster clock speed. But he realized that he could achieve the same effect with clever use of faster bipolar-logic chips that had become available only a few months earlier. By adding several high-speed logic circuits and a few ordinary circuits, he pushed the resolution up to 512 dots.

Another advantage was that the PALs were a mature technology and their electrical parameters could tolerate large variations from the specified values, making the Macintosh more stable and more reliable, important characteristics for a so-called appliance product. Since the electrical characteristics of each integrated circuit may vary from those of other ICs made in different batches, the sum of the variances of 50 or so components in a computer may be large enough to threaten the system’s integrity.

Even as late as the summer of 1982, with one deadline after another blown, the Macintosh designers were finding ways of adding features to the computer. After the team disagreed over the choice of a white background for the video with black characters or the more typical white-on-black, it was suggested that both options be made available to the user through a switch on the back of the Macintosh. But this compromise led to debates about other questions.

“It became an intense and almost religious argument,” recalled Mr. Espinosa, “about the purity of the system’s design versus the user’s freedom to configure the system as he liked. We had weeks of argument over whether to add a few pennies to the cost of the machine.”

The designers, being committed to the Macintosh, often worked long hours to refine the system. A programmer might spend many night hours to reduce the time needed to format a disk from three minutes to one. The reasoning was that expenditure of a Macintosh programmer’s time amounted to little in comparison with a reduction of two minutes in the formatting time. “If you take two extra minutes per user, times a million people, times 50 disks to format, that’s a lot of the world’s time,” Mr. Espinosa explained.
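Mr. Espinosa’s back-of-the-envelope figure can be worked through directly. The three inputs below are the ones he quotes; the conversion to years is simply an added convenience.

```python
MINUTES_SAVED_PER_FORMAT = 2   # three minutes reduced to one
USERS = 1_000_000              # "a million people"
DISKS_PER_USER = 50            # "50 disks to format"

total_minutes = MINUTES_SAVED_PER_FORMAT * USERS * DISKS_PER_USER
total_years = total_minutes / (60 * 24 * 365)

print(f"{total_minutes:,} minutes saved, roughly {total_years:.0f} years")
```

Against that total, even weeks of a programmer’s nights are a small cost.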

But if the group’s commitment to refinements often kept them from meeting deadlines, it paid off in tangible design improvements. “There was a lot of competition for doing something very bright and creative and amazing,” said Mr. Espinosa. “People were so bright that it became a contest to astonish them.”

The Macintosh team’s approach to working, “like a Chautauqua, with daylong affairs where people would sit and talk about how they were going to do this or that,” sparked creative thinking about the Macintosh’s capabilities. When a programmer and a hardware designer started to discuss how to implement the sound generator, for instance, they were joined by one of several nontechnical members of the team (marketing staff, finance specialists, secretaries), who remarked how much fun it would be if the Macintosh could sound four distinct voices at once so the user could program it to play music. That possibility excited the programmer and the hardware engineer enough to spend extra hours designing a sound generator with four voices.

The payoff of such discussions with nontechnical team members, Mr. Espinosa said, “was coming up with all those glaringly evident things that only somebody completely ignorant could come up with. If you immerse yourself in a group that doesn’t know the technical limitations, then you get a group mania to try and deny those limitations. You start trying to do the impossible—and once in a while succeeding.”

The sound generator in the original Macintosh was quite simple—a one-bit register connected to a speaker. To vibrate the speaker, the programmer wrote a software loop that changed the value of the register from one to zero repeatedly. Nobody had even considered designing a four-voice generator—that is, not until “group mania” set in.
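The one-bit scheme is easy to simulate. The sketch below models a software loop that flips a single speaker bit at fixed intervals to produce a square wave. The function name, the loop rate, and the tone frequency are all illustrative assumptions; the article gives no timing figures for the original design.

```python
def one_bit_tone(frequency_hz: float, duration_s: float,
                 loop_rate_hz: int = 20_000) -> list[int]:
    """Simulate a loop that toggles a one-bit speaker register.

    Returns the register value (0 or 1) seen on each pass of the loop.
    """
    half_period = loop_rate_hz / (2 * frequency_hz)  # loop passes between flips
    bit = 0
    next_flip = half_period
    samples = []
    for tick in range(int(duration_s * loop_rate_hz)):
        if tick >= next_flip:
            bit ^= 1                 # change the register from one to zero or back
            next_flip += half_period
        samples.append(bit)
    return samples

tone = one_bit_tone(frequency_hz=1000, duration_s=0.01)
print(len(tone), "loop passes,", sum(a != b for a, b in zip(tone, tone[1:])), "flips")
```

Because the register holds only one bit, the loop controls pitch but not amplitude or timbre, which is why several simultaneous voices were out of reach for this design.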

Mr. Smith was pondering this problem when he noticed that the video circuitry was very similar to the sound-generator circuitry. Since the video was bit-mapped, a bit of memory represented one dot on the video screen. The bits that made up a complete video image were held in a block of RAM and fetched by a scanning circuit to generate the image. Sound circuitry required similar scanning, with data in memory corresponding to the amplitude and frequency of the sound emanating from the speaker. Mr. Smith reasoned that by adding a pulse-width-modulator circuit, the video circuitry could be used to generate sound during the last microsecond of the horizontal retrace—the time it took the electron beam in the cathode-ray tube of the display to move from the last dot on each line to the first dot of the next line. During the retrace the video-scanning circuitry jumped to a block of memory earmarked for the amplitude value of the sound wave, fetched bytes, deposited them in a buffer that fed the sound generator, and then jumped back to the video memory in time for the next trace. The sound generator was simply a digital-to-analog converter connected to a linear amplifier.

To enable the sound generator to produce four distinct voices, software routines were written and embedded in ROM to accept values representing four separate sound waves and convert them into one complex wave. Thus a programmer writing applications programs for the Macintosh could specify separately each voice without being concerned about the nature of the complex wave.
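A minimal sketch of that kind of mixing follows. It assumes each voice is a table of 8-bit amplitudes stepped through at its own rate, and that the four values are averaged into one byte for the converter. The table length, the step values, and the function names are hypothetical; the article does not describe how the ROM routines were actually written.

```python
import math

TABLE_SIZE = 256  # assumed wavetable length (not from the article)

def sine_table(size: int = TABLE_SIZE) -> list[int]:
    """One cycle of a sine wave as unsigned 8-bit amplitudes (0..255)."""
    return [round(127.5 + 127.5 * math.sin(2 * math.pi * i / size))
            for i in range(size)]

def mix_voices(tables: list[list[int]], steps: list[float],
               n_samples: int) -> list[int]:
    """Combine several voices into one complex wave.

    Each voice advances through its table by its own step, so a larger
    step gives a higher pitch. The per-sample sum is scaled back to 0..255.
    """
    phases = [0.0] * len(tables)
    out = []
    for _ in range(n_samples):
        total = 0
        for v, table in enumerate(tables):
            total += table[int(phases[v]) % len(table)]
            phases[v] = (phases[v] + steps[v]) % len(table)
        out.append(total // len(tables))
    return out

wave = sine_table()
buffer = mix_voices([wave] * 4, steps=[1, 2, 3, 4], n_samples=512)
print(min(buffer), max(buffer))
```

The caller specifies each voice separately through its table and step; only the mixer needs to know what the combined wave looks like.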

Gearing up to Build Macs

In the fall of 1982, as the factory was being built and the design of the Macintosh was approaching its final form, Mr. Jobs began to play a greater role in the day-to-day activities of the designers. Although the hardware for the sound generator had been designed, the software to enable the computer to make sounds had not yet been written by Mr. Hertzfeld, who considered other parts of the Macintosh software more urgent. Mr. Jobs had been told that the sound generator would be impressive, with the analog circuitry and the speaker having been upgraded to accommodate four voices. But since this was an additional hardware expense, with no audible results at that point, one Friday Mr. Jobs issued an ultimatum: “If I don’t hear sound out of this thing by Monday morning, we’re ripping out the amplifier.”

That motivation sent Mr. Hertzfeld to the office during the weekend to write the software. By Sunday afternoon only three voices were working. He telephoned his colleague Mr. Smith and asked him to stop by and help optimize the software.

“Do you mean to tell me you’re using subroutines!” Mr. Smith exclaimed after examining the problem. “No wonder you can’t get four voices. Subroutines are much too slow.”

By Monday morning, the pair had written the microcode programs to produce results that satisfied Mr. Jobs.

Although Mr. Jobs’s input was sometimes hard to define, his instinct for defining the Macintosh as a product was important to its success, according to the designers. “He would say, ‘This isn’t what I want. I don’t know what I want, but this isn’t it,’” Mr. Smith said.

“He knows what great products are,” noted Mr. Hertzfeld. “He intuitively knows what people want.”

One example was the design of the Macintosh casing, when clay models were made to demonstrate various possibilities. “I could hardly tell the difference between two models,” Mr. Hertzfeld said. “Steve would walk in and say, ‘This one stinks and this one is great.’ And he was usually right.”

Because Mr. Jobs placed great emphasis on packaging the Macintosh to occupy little space on a desk, a vertical design was used, with the disk drive placed underneath the CRT.

Mr. Jobs also decreed that the Macintosh contain no fans, which he had tried to eliminate from the original Apple computer. A vent was added to the Macintosh casing to allow cool air to enter and absorb heat from the vertical power supply, with hot air escaping at the top. The logic board was horizontally positioned.

Mr. Jobs, however, at times gave unworkable orders. When he demanded that the designers reposition the RAM chips on an early printed-circuit board because they were too close together, “most people chortled,” one designer said. The board was redesigned with the chips farther apart, but it did not work because the signals from the chips took too long to propagate over the increased distance. The board was redesigned again to move the chips back to their original position.

Stopping the Radiation Leaks

When the design group started to concentrate on manufacturing, the most imposing task was preventing radiation from leaking from the Macintosh’s plastic casing. At one time the fate of the Apple II had hung in the balance as its designers tried unsuccessfully to meet the emissions standards of the Federal Communications Commission. “I quickly saw the number of Apple II components double when several inductors and about 50 capacitors were added to the printed-circuit boards,” Mr. Smith recalled. With the Macintosh, however, he continued, “we eliminated all of the discrete electronics by going to a connector-less and solder-less design; we had had our noses rubbed in the FCC regulations, and we knew how important that was.” The high-speed serial I/O ports caused little interference because they were easy to shield.

Another question that arose toward the end of the design was the means of testing the Macintosh. In line with the zero-defect concept, the Macintosh team devised software for factory workers to use in debugging faults in the printed-circuit boards, as well as self-testing routines for the Macintosh itself.

The disk controller is tested with the video circuits. Video signals sent into the disk controller are read by the microprocessor. “We can display on the screen the pattern we were supposed to receive and the pattern we did receive when reading off the disk,” Mr. Smith explained, “and other kinds of prepared information about errors and where they occurred on the disk.”

To test the printed-circuit boards in the factory, the Macintosh engineers designed software for a custom bed-of-nails tester that checks each computer in only a few seconds, faster than off-the-shelf testers. If a board fails when a factory worker places it on the tester, the board is handed to another worker who runs a diagnostic test on it. A third worker repairs the board and returns it to the production line.

Each Macintosh is burned in—that is, turned on and heated—to detect the potential for early failures before shipping, thus increasing the reliability of the computers that are in fact shipped.

When Apple completed building the Macintosh factory, at an investment of $20 million, the design team spent most of its time there, helping the manufacturing engineers get the production lines moving. Problems with the disk drives in the middle of 1983 required Mr. Smith to redesign his final prototype twice.

Some of the plans for the factory proved troublesome, according to Ms. Coleman. The automatic insertion scheme for discrete components was unexpectedly difficult to implement. Many of the precise specifications for the geometric and electrical properties of the parts had to be reworked several times. Machines proved to be needed to align many of the parts before they were inserted. Although the machines, at $2000 apiece, were not expensive, they were a last-minute requirement.

The factory had few major difficulties with its first experimental run in December 1983, although the project had slipped from its May 1983 deadline. Often the factory would stop completely while engineers busily traced the faults to the sources—part of the zero-defect approach. Mr. Smith and the other design engineers virtually lived in the factory that December.

In January 1984 the first salable Macintosh computer rolled off the line. Although the production rate was erratic at first, it has since settled at one Macintosh every 27 seconds—about a half million a year.
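The two production figures can be checked against each other. The 27-second cycle and the half-million annual volume come from the text; the implied running hours are a derived estimate, not something the article states.

```python
SECONDS_PER_UNIT = 27        # one Macintosh every 27 seconds
UNITS_PER_YEAR = 500_000     # "about a half million a year"

# Line time needed to build a year's volume at that pace.
line_hours = SECONDS_PER_UNIT * UNITS_PER_YEAR / 3600

# Output if the line never stopped, for comparison.
continuous_units = 365 * 24 * 3600 // SECONDS_PER_UNIT

print(f"{line_hours:,.0f} line hours per year")
print(f"{continuous_units:,} units per year if run around the clock")
print(f"implied utilization: {line_hours / (365 * 24):.0%}")
```

On these numbers the line would need to run a little under half of the hours in a year to reach half a million units, which suggests the quoted figures assume something less than round-the-clock operation.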

An Unheard-of $30 Million Marketing Budget

The marketing of the Macintosh shaped up much like the marketing of a new shampoo or soft drink, according to Mike Murray, who was hired in 1982 as the third member of the Macintosh marketing staff. “If Pepsi has two times more shelf space than Coke,” he explained, “you will sell more Pepsi. We want to create shelf space in your mind for the Macintosh.”

To create that space on a shelf already crowded by IBM, Tandy, and other computer companies, Apple launched an aggressive advertising campaign—its most expensive ever.

Mr. Murray proposed the first formal marketing budget for the Macintosh in late 1983: he asked for $40 million. “People literally laughed at me,” he recalled. “They said, ‘What kind of a yo-yo is this guy?’” He didn’t get his $40 million budget, but he got close to it: $30 million.

The marketing campaign started before the Macintosh was introduced. Television viewers watching the Super Bowl football game in January 1984 saw a commercial with the Macintosh overcoming Orwell’s nightmare vision of 1984.

Other television advertisements, as well as magazine and billboard ads, depicted the Macintosh as being easy to learn to use. In some ads, the Mac was positioned directly alongside IBM’s personal computer. Elaborate color foldouts in major magazines pictured the Macintosh and members of the design team.

“The interesting thing about this business,” mused Mr. Murray, “is that there is no history. The best way is to come in really smart, really understand the fundamentals of the technology and how the software dealers work, and then run as fast as you can.”

The Mac Team Disperses

“We’ve established a beachhead with the Macintosh,” explained Mr. Murray. “We’re on the beach. If IBM knew in their heart of hearts how aggressive and driven we are, they would push us off the beach right now, and I think they’re trying. The next 18 to 24 months is do-or-die time for us.”

With sales of the Lisa workstation disappointing, Apple is counting on the Macintosh to survive. The ability to bring out a successful family of products is seen as a key to that goal, and the company is working on a series of Macintosh peripherals—printers, local-area networks, and the like. This, too, is proving both a technical and organizational challenge.

“Once you go from a stand-alone system to a networked one, the complexity increases enormously,” noted Mr. Murray. “We cannot throw it all out into the market and let people tell us what is wrong with it. We have to walk before we can run.”

Only two software programs were written by Apple for the Macintosh: MacPaint, which allows users to draw pictures with the mouse, and MacWrite, a word-processing program. Apple is counting on independent software vendors to write and market applications programs for the Macintosh that will make it a more attractive product for potential customers. The company is also modifying some Lisa software for use on the Macintosh and making versions of the Macintosh software to run on the Lisa.

Meanwhile the small, coherent Macintosh design team is no longer. “Nowadays we’re a large company,” Mr. Smith remarked.

“The pendulum of the project swings,” explained Mr. Hertzfeld, who has taken a leave of absence from Apple. “Now the company is a more mainstream organization, with managers who have managers working for them. That’s why I’m not there, because I got spoiled” working on the Macintosh design team.
