Chipmaker Advanced Micro Devices Inc. is taking on Nvidia Corp. with the launch of a new artificial intelligence accelerator chip, announced at its Data Center & AI Technology Premiere event today.

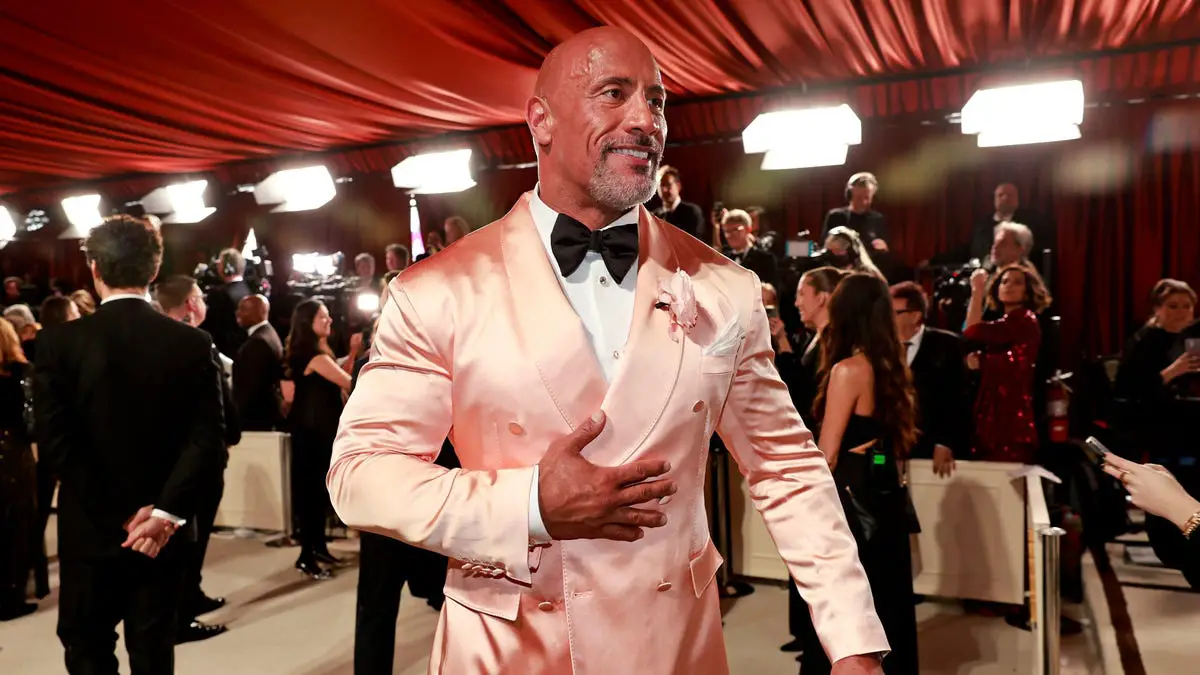

During a keynote speech, AMD Chair and Chief Executive Lisa Su (pictured) announced the company’s new Instinct MI300X accelerator, which is targeted specifically at generative AI workloads. Generative AI is the technology that underpins OpenAI’s famed chatbot ChatGPT, which responds to questions and prompts in a humanlike, conversational way.

At the 2023 Consumer Electronics Show in January, Su had described the MI300A as the “world’s first data-center integrated CPU + GPU,” explaining that it integrates both central processing units and graphics processing units into a single processor. The MI300X is a GPU-only variant of that design, and today Su said customers will be able to use it to run generative AI models with up to 80 billion parameters. Customers will be able to sample the MI300X in the third quarter, with production set to ramp up before the end of the year.

Like Nvidia, AMD has said it sees a huge opportunity in AI, a space swept up by the hype around ChatGPT, because generative AI requires massive amounts of computing power from the data centers that host it. Su reiterated that point today, calling AI the company’s “most strategic long-term growth opportunity.”

“We think about the data center AI accelerator [market] growing from something like $30 billion this year, at over 50% compound annual growth rate, to over $150 billion in 2027,” Su said.

Alongside the MI300X, Su introduced AMD’s Infinity Architecture Platform, a self-contained system for running generative AI inference and training workloads. It is powered by eight MI300X chips, she said, and is intended to rival Nvidia’s DGX supercomputer platform for AI applications.

Analysts have had high expectations of AMD ever since it completed its $49 billion acquisition of the chipmaker Xilinx Inc. in February 2022. During the keynote, AMD President Victor Peng, who previously led Xilinx as its CEO, discussed the company’s ROCm software ecosystem for data center accelerators. ROCm is intended to support the MI300X accelerator and “bring together an open AI software ecosystem,” Peng said.

Here, too, AMD is going up against Nvidia’s AI strategy, which relies not only on chip hardware but also on a software ecosystem that helps companies get the most out of Nvidia’s AI chips.

AMD’s hardware and software may well be compelling, but the company’s stock fell more than 3% today, which analysts attributed to the lack of any major customer announcements for the MI300X or its CPU-plus-GPU sibling, the MI300A. AMD has traditionally showcased big companies that will be early adopters of its new hardware, but it did not do so today. The company also did not say how much the MI300X will cost.

“It can be very difficult for chipmakers to gain a strong footing in additional markets,” said Holger Mueller of Constellation Research Inc. “They tend to be very good at either CPUs or GPUs, with very little middle ground. It will be difficult for AMD to change that, but it is trying.”

“I think the lack of a (large customer) saying they will use the MI300 A or X may have disappointed the Street,” Tirias Research analyst Kevin Krewell told Reuters. “They wanted AMD to say they have replaced Nvidia in some design.”

At present, Nvidia dominates the AI computing industry, with a market share of between 80% and 95%, analysts say. The excitement around AI has driven massive gains in Nvidia’s stock, and at the end of last month it became the first chipmaker to reach a $1 trillion market capitalization.

It has very few serious competitors in the market. Intel Corp. and startups such as SambaNova Systems Inc. and Cerebras Systems Inc. all offer competing hardware but have failed to gain much traction. Nvidia’s biggest competitors are probably Alphabet Inc.’s Google Cloud and Amazon Web Services Inc., which have developed internal AI chips and made them available to rent through their respective cloud infrastructure platforms.

Not everyone was so critical of AMD, though. Patrick Moorhead of Moor Insights & Strategy told SiliconANGLE that it’s important to look at the longer-term picture, noting that the hyperscale cloud AI accelerator market is likely to be worth $125 billion within the next five years. That is where AMD can make its impact felt, he said, because cloud hyperscalers are too reliant on Nvidia and are uncomfortable with the lack of a broad supply chain.

“Long-term, the cloud players can either create their own chips, continue to sole-source from Nvidia, or work with a second player like AMD,” Moorhead said. “I believe AMD, if it passes muster with its third-quarter sampling, could pick up incremental AI business between the fourth quarter of 2023 and first quarter of 2024. In addition, AI framework providers like PyTorch and foundation model providers like Hugging Face want more options to enhance their innovation too.”

Moorhead also pointed out that, despite Nvidia’s dominant position, most AI inference workloads, which run trained models to produce results, are still processed on CPUs rather than GPUs, and that’s unlikely to change anytime soon. “AI spans from the smallest IoT device to smartphones, PCs and the largest hyperscale data centers,” he said. “That AI will be spread across CPUs, GPUs and NPUs up and down the value chain. Whatever way you slice it, AMD will get lift from AI.”

In another announcement today, Su also introduced AMD’s fourth-generation EPYC data center CPU, codenamed Bergamo, which will compete with CPUs from Intel and Nvidia.

AMD is far more competitive in the traditional data center market, and the Bergamo chip looks to have some impressive specifications. Su said the 128-core-per-socket CPU offers strong energy efficiency and lets customers run their workloads on fewer servers. It also delivers 1.8 times the performance per watt of Intel’s fourth-generation Xeon Platinum CPU, she said.

The company did at least name a major customer for the Bergamo chip: Alexis Bjorlin, vice president of computing infrastructure at Meta Platforms Inc., said her company is already using it as an early adopter.

Images: AMD
