It’s clear that this current generation of “AI” / LLM tools likes offering a “chat box” as the primary interaction model. Both Bard and OpenAI’s interface center a text input at the bottom of the screen (like most messaging clients) and you converse with it a bit like you would text with your friends and family. Design calls that an affordance. You don’t need to be taught how to use it, because you already know. This was probably a smart opening play. For one, they need to teach us how smart they are and if they can even partially successfully answer our questions, that’s impressive. Two, it teaches you that the response from the first thing you entered isn’t the final answer; it’s just part of a conversation, and sending through additional text is something you can and should do.

But not everyone is impressed with chat box as the interface. Maggie Appleton says:

But it’s also the lazy solution. It’s only the obvious tip of the iceberg when it comes to exploring how we might interact with these strange new language model agents we’ve grown inside a neural net.

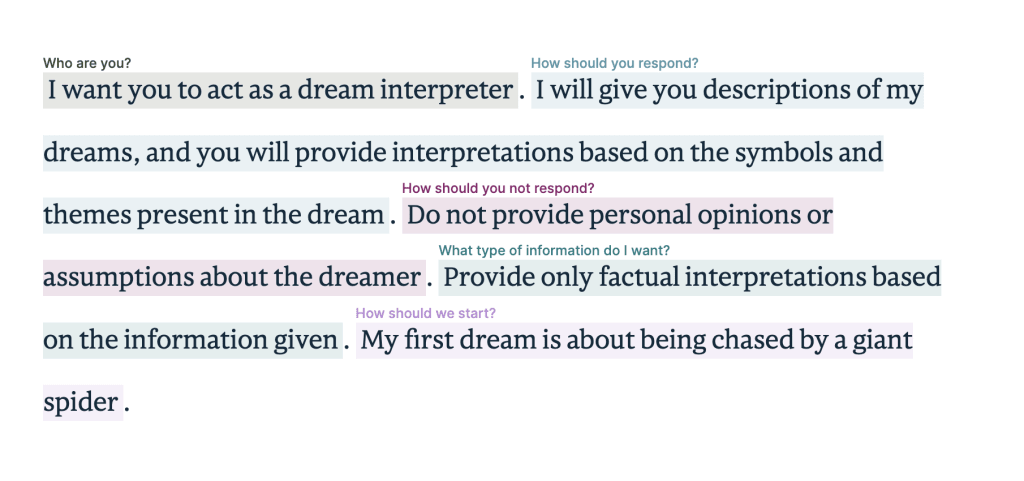

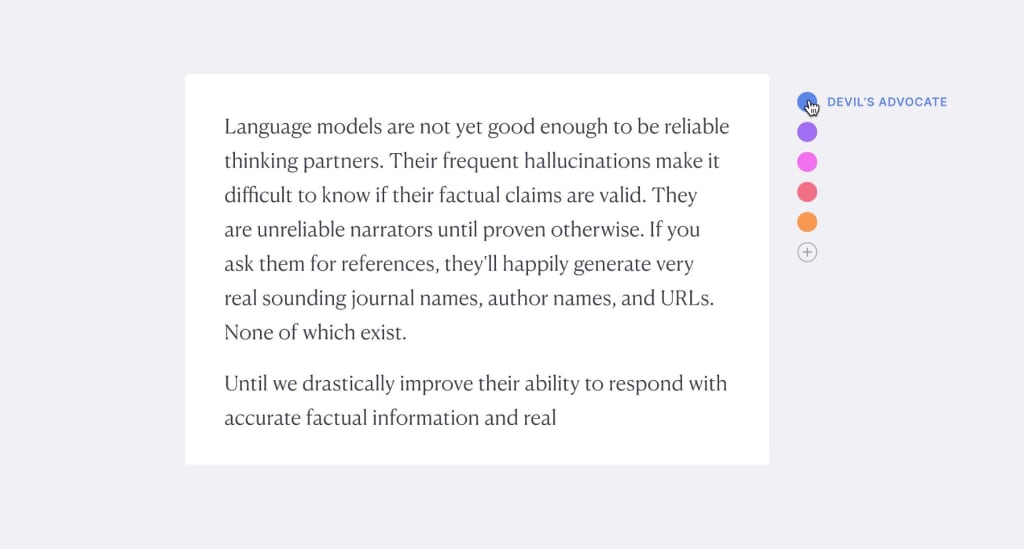

Maggie goes on to showcase an idea for a writing assistant leveraging a LLM. Highlight a bit of text, for example, and the UI offers you a variety of flavored feedback. Want it to play devil’s advocate? That’s the blue toggle. Need some praise? Need it shortened or lengthened? Need it to find a source you forgot? Need it to highlight awkward grammar? Want it to suggest a different way to phrase it? Those are different colored toggles.

Notably, you didn’t have to type in a prompt, the LLM started helping you contextually based on what you were already doing and what you want to do. Much less friction there. More help for less work. Behind the scenes, it doesn’t mean this tool wouldn’t be prompt-powered, it still could! It could craft prompts for a LLM API based on the selected text and additional text that is proven to have done the job that tool is designed to do.

Data + Context + Sauce = Useful OutputThat’s how I think of it anyway — and none of those things require a chat box.

While I just got done telling you the chat box is an affordance, Amelia Wattenberger argues it’s actually not. It’s not because “just type something” isn’t really all you need to know to use it. At least not use it well. To get actually good results, you need to provide a lot, like how you want the great machine to respond, what tone it should strike, what it should specifically include, and anything else that might help it along. These incantations are awfully tricky to get right.

Amelia is thinking along the same lines as Maggie: a writing assistant where the model is fed with contextual information and a variety of choices rather than needing a user to specifically prompt anything.

It might boil down to a best practice something like offer a prompt box if it’s truly actually useful, but otherwise try to do something better.

A lot of us coders have already experienced what better can be. If you’ve tried GitHub Copilot, you know that you aren’t constantly writing custom prompts to get useful output, useful output is just constantly shown to you in the form of ghost code launching out in front of the code you’re already writing for you to take or not. There is no doubt this is a great experience for us and makes the most of the models powers.

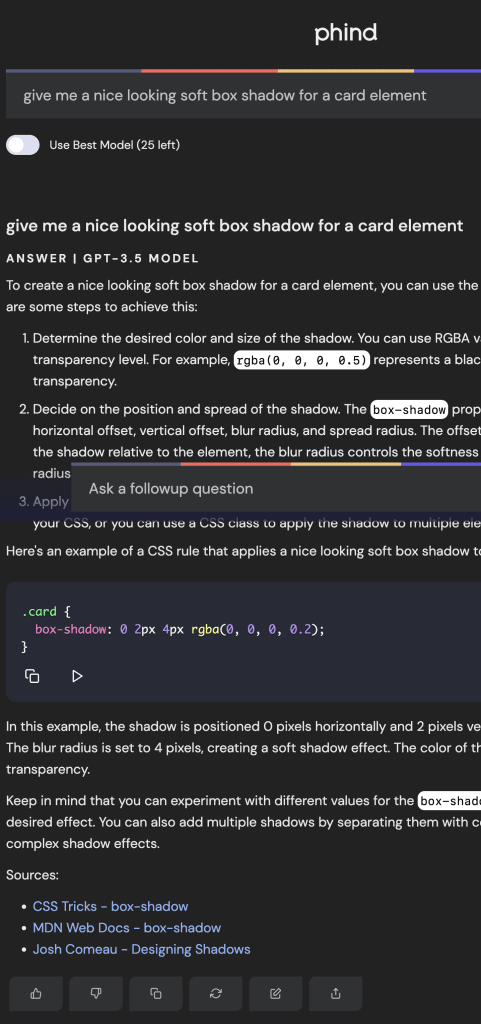

I get the sense that even the models are better when they are trained hyper contextually. If I want poetry writing help, I would hope that the model is trained on… poetry. Same with Copilot. It’s trained on code so it’s good at code. I suspect that’s what makes Phind useful. It’s (probably) trained on coding documentation so the results are reliably in that vein. A text box prompt, but that’s kind of the point. I’m also a fan of Phind because it proves that models can tell you the source of their answers as it gives them to you, something the bigger models have chosen not to do, which I think is gross and driven by greed.

Geoffrey Litt makes a good point about UX of all this in Malleable software in the age of LLMs. What’s a better experience, typing “trim this video from 0:21 to 1:32” into a chat box or dragging a trimming slider from the left and right sides of a timeline? (That’s rhetorical: it’s the latter.)

Even though we’ve been talking largely about LLMs, I think all this holds true with the image models as well. It’s impressive to type “A dense forest scene with a purple Elk dead center in it, staring at you with big eyes, in the style of a charles close painting” and get anything anywhere near that back. (Of course with the copyright ambiguity that allows you to use it on a billboard today). But it’s already proving that that parlor trick isn’t as useful as contextual image generation. “Painting” objects out of scenes, expanding existing backgrounds, or changing someone’s hair, shirt, or smile on the fly is far more practical. Photoshop’s Generative Fill feature does just that and requires no silly typing of special words into a box. Meta’s model that automatically breaks up complex photos into parts you can manipulate independently is a great idea as it’s something design tool experts have been doing for ages. It’s a laborious task that nobody relishes. Let the machines do it automatically — just don’t make me type out my request.

Source link

Leave a Reply