Performance issues can creep up when you least expect them. This can have negative consequences for your customers. As the user base grows, your app can lag because it’s not able to meet the demand. Luckily, there are tools and techniques available to tackle these issues in a timely manner.

We created this article in partnership with Site24x7. Thank you for supporting the partners who make SitePoint possible.

In this take, I’ll explore performance bottlenecks in a .NET 6 application. The focus will be on a performance issue I’ve personally seen in production. The intent is for you to be able to reproduce the issue in your local dev environment and tackle the problem.

Feel free to download the sample code from GitHub or follow along. The solution has two APIs, unimaginatively named First.Api and Second.Api. The first API calls into the second API to get weather data. This is a common pattern: APIs often call into other APIs so that data sources remain decoupled and can scale independently.

First, make sure you have the .NET 6 SDK installed on your machine. Then, open a terminal or a console window:

```shell
dotnet new webapi --name First.Api --use-minimal-apis --no-https --no-openapi
dotnet new webapi --name Second.Api --use-minimal-apis --no-https --no-openapi
```

Run these commands in a solution folder such as performance-bottleneck-net6. They create two web projects with minimal APIs, no HTTPS, and no Swagger/OpenAPI support. The tool scaffolds the folder structure, so please look at the sample code if you need help setting up these two new projects.

The solution file can go in the solution folder. This allows you to open the entire solution via an IDE like Rider or Visual Studio:

```shell
dotnet new sln --name Performance.Bottleneck.Net6
dotnet sln add First.Api/First.Api.csproj
dotnet sln add Second.Api/Second.Api.csproj
```

Next, be sure to set the port number for each web project, for example via the applicationUrl setting in each project’s Properties/launchSettings.json file. In the sample code, I’ve set them to 5060 for the first API and 5176 for the second. The specific numbers don’t matter, but I’ll use these to reference the APIs throughout the sample code. So either change your port numbers to match, or keep what the scaffold generates and update the URLs in the code consistently.

The Offending Application

Open the Program.cs file in the second API and put in place the code that responds with weather data:

```csharp
var builder = WebApplication.CreateBuilder(args);
var app = builder.Build();

var summaries = new[]
{
    "Freezing", "Bracing", "Chilly", "Cool", "Mild", "Warm", "Balmy", "Hot", "Sweltering", "Scorching"
};

app.MapGet("/weatherForecast", async () =>
{
    // Simulate an IO-bound call, such as a database or distributed cache lookup.
    await Task.Delay(10);

    // Build 1,000 forecasts, then return only the first five.
    return Enumerable
        .Range(0, 1000)
        .Select(index =>
            new WeatherForecast
            (
                DateTime.Now.AddDays(index),
                Random.Shared.Next(-20, 55),
                summaries[Random.Shared.Next(summaries.Length)]
            )
        )
        .ToArray()[..5];
});

app.Run();

public record WeatherForecast(
    DateTime Date,
    int TemperatureC,
    string? Summary)
{
    public int TemperatureF => 32 + (int)(TemperatureC / 0.5556);
}
```

The minimal APIs feature in .NET 6 helps keep the code small and succinct. The endpoint loops through one thousand records, returns only the first five, and awaits a short Task.Delay to simulate async data processing. In a real project, this code might call into a distributed cache or a database, which is an IO-bound operation.
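As a quick illustration (a standalone console sketch, not part of the sample repo), the snippet below counts how many times the Select projection runs. Because ToArray materializes the whole sequence before the [..5] range is applied, all 1,000 records get built even though only five are returned. Take(5) appears only for contrast; the sample keeps the full loop on purpose to simulate work.

```csharp
using System;
using System.Linq;

// Count how many times the projection runs in each pipeline.
var eagerCalls = 0;
var eager = Enumerable
    .Range(0, 1000)
    .Select(index => { eagerCalls++; return index; })
    .ToArray()[..5];   // ToArray builds all 1,000 items, then the range keeps five

var lazyCalls = 0;
var lazy = Enumerable
    .Range(0, 1000)
    .Select(index => { lazyCalls++; return index; })
    .Take(5)           // Take stops pulling items after the fifth one
    .ToArray();

Console.WriteLine($"ToArray()[..5]: {eager.Length} items, {eagerCalls} projections"); // 5 items, 1000 projections
Console.WriteLine($"Take(5): {lazy.Length} items, {lazyCalls} projections");          // 5 items, 5 projections
```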

Now, go to the Program.cs file in the first API and write the code that uses this weather data. You can simply copy–paste this and replace whatever the scaffold generates:

```csharp
var builder = WebApplication.CreateBuilder(args);

// Register one shared HttpClient so its connection pool is reused across requests.
builder.Services.AddSingleton(_ => new HttpClient(
    new SocketsHttpHandler
    {
        PooledConnectionLifetime = TimeSpan.FromMinutes(5)
    })
{
    // Points at the second API; match the port you configured for it.
    BaseAddress = new Uri("http://localhost:5176")
});

var app = builder.Build();

app.MapGet("/", async (HttpClient client) =>
{
    var result = new List<List<WeatherForecast>?>();

    // Call the second API 100 times, one call after another.
    for (var i = 0; i < 100; i++)
    {
        result.Add(
            await client.GetFromJsonAsync<List<WeatherForecast>>(
                "/weatherForecast"));
    }

    return result[Random.Shared.Next(0, 100)];
});

app.Run();

public record WeatherForecast(
    DateTime Date,
    int TemperatureC,
    string? Summary)
{
    public int TemperatureF => 32 + (int)(TemperatureC / 0.5556);
}
```

The HttpClient gets registered as a singleton, because reusing one instance makes the client scalable. In .NET, each new client (with its own handler) opens its own sockets in the underlying operating system, so a good technique is to reuse those connections by reusing the instance. Here, the handler sets a pooled connection lifetime of five minutes. This lets the client keep reusing open sockets across requests, while still recycling each connection periodically so it picks up changes such as DNS updates.

A base address simply tells the client where to go, so make sure this points to the correct port number set in the second API.
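To see what this configuration does outside of dependency injection, here is a minimal console sketch that builds the same client by hand. The only assumption is the sample’s port 5176; no request is sent. It shows how a relative path such as /weatherForecast resolves against BaseAddress.

```csharp
using System;
using System.Net.Http;

// The handler owns the connection pool; one shared HttpClient means one shared pool.
var handler = new SocketsHttpHandler
{
    // Recycle each pooled connection after five minutes so DNS changes are picked up.
    PooledConnectionLifetime = TimeSpan.FromMinutes(5)
};

var baseAddress = new Uri("http://localhost:5176"); // the second API's port in the sample
using var client = new HttpClient(handler) { BaseAddress = baseAddress };

// Relative paths passed to the client resolve against BaseAddress.
var resolved = new Uri(client.BaseAddress!, "/weatherForecast");
Console.WriteLine(resolved); // http://localhost:5176/weatherForecast
```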

When a request comes in, the code loops one hundred times and calls into the second API on every iteration. This simulates, for example, a list of records that each require a call into another API. The iterations are hardcoded here, but in a real project this could be a list of users, which can grow without limit as the business grows.

Now, focus your attention on the looping, because it has implications for performance. In algorithmic analysis, a single loop has linear complexity, or O(n). But the second API also loops, so the nested work becomes O(n·m), which is quadratic, O(n^2), when both lists grow at the same rate. On top of that, every iteration crosses an IO boundary, which adds network latency to each call.

This has a multiplicative effect: for every iteration in the first API, the second API loops a thousand times, for 100 * 1000 = 100,000 iterations in total. Remember, these lists are unbounded, so performance degrades quadratically, not linearly, as the datasets grow.
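The arithmetic is easy to check with a small sketch. CountIterations is a hypothetical helper that stands in for the two loops with no IO involved: it simply counts inner-loop iterations.

```csharp
using System;

// Hypothetical helper: counts the work when an outer loop of `outer` calls
// hits an API that loops over `inner` records per call (no IO, just counting).
static long CountIterations(int outer, int inner)
{
    long total = 0;
    for (var i = 0; i < outer; i++)      // first API: one call per item
    {
        for (var j = 0; j < inner; j++)  // second API: one record per item
        {
            total++;
        }
    }
    return total;
}

Console.WriteLine(CountIterations(100, 1000)); // 100000
Console.WriteLine(CountIterations(200, 2000)); // 400000: doubling both sizes quadruples the work
```

Doubling both list sizes quadruples the work, which is what quadratic growth means in practice.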

When angry customers are spamming the call center demanding a better user experience, employ these tools to try to figure out what’s going on.

CURL and NBomber

The first tool will help single out which API to focus on. When optimizing code, it’s possible to optimize everything endlessly, so avoid premature optimizations. The goal is to get the performance to be “just good enough”, and this tends to be subjective and driven by business demands.

First, call into each API individually using CURL, for example, to get a feel for the latency:

```shell
curl -i -o /dev/null -s -w "%{time_total}" http://localhost:5060
curl -i -o /dev/null -s -w "%{time_total}" http://localhost:5176/weatherForecast
```

The port number 5060 belongs to the first API, and 5176 belongs to the second. Check that these match the ports on your machine.
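If you’d rather stay in .NET, a Stopwatch gives a rough equivalent of curl’s time_total. TimeAsync is a hypothetical helper, and Task.Delay stands in for the HTTP call so the sketch runs without either API; passing something like client.GetAsync("/") instead would time the first API.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

// Hypothetical helper: measures the wall-clock time of any async operation.
static async Task<TimeSpan> TimeAsync(Func<Task> operation)
{
    var stopwatch = Stopwatch.StartNew();
    await operation();
    stopwatch.Stop();
    return stopwatch.Elapsed;
}

// Task.Delay stands in for the HTTP call so the sketch runs without either API.
var elapsed = await TimeAsync(() => Task.Delay(100));
Console.WriteLine($"{elapsed.TotalSeconds:F3}");
```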

The second API responds in a fraction of a second, which is good enough and likely not the culprit. But the first API takes almost two seconds to respond. This is unacceptable, because web servers can time out requests that take this long. Also, a two-second latency is too slow from the customer’s perspective, because it’s a disruptive delay.

Next, a tool like NBomber will help benchmark the problematic API.

Go back to the console and, inside the root folder, create a test project:

```shell
dotnet new console -n NBomber.Tests
cd NBomber.Tests
dotnet add package NBomber
dotnet add package NBomber.Http
cd ..
dotnet sln add NBomber.Tests/NBomber.Tests.csproj
```

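In the Program.cs file, write the benchmarks. This code uses NBomber’s older step-based API (Step.Create with a client factory); newer major versions of NBomber and NBomber.Http replaced it, so you may need to install an older package version for it to compile: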

```csharp
using NBomber.Contracts;
using NBomber.CSharp;
using NBomber.Plugins.Http.CSharp;

// One step: GET the first API and record success or failure.
var step = Step.Create(
    "fetch_first_api",
    clientFactory: HttpClientFactory.Create(),
    execute: async context =>
    {
        var request = Http
            .CreateRequest("GET", "http://localhost:5060/")
            .WithHeader("Accept", "application/json");

        var response = await Http.Send(request, context);

        // Count anything other than 200 OK as a failure.
        return response.StatusCode == 200
            ? Response.Ok(
                statusCode: response.StatusCode,
                sizeBytes: response.SizeBytes)
            : Response.Fail();
    });

var scenario = ScenarioBuilder
    .CreateScenario("first_http", step)
    .WithWarmUpDuration(TimeSpan.FromSeconds(5))
    // Ramp up: 1 req/s for 5 s, then 2 req/s for 10 s, then 3 req/s for 15 s.
    .WithLoadSimulations(
        Simulation.InjectPerSec(rate: 1, during: TimeSpan.FromSeconds(5)),
        Simulation.InjectPerSec(rate: 2, during: TimeSpan.FromSeconds(10)),
        Simulation.InjectPerSec(rate: 3, during: TimeSpan.FromSeconds(15))
    );

NBomberRunner
    .RegisterScenarios(scenario)
    .Run();
```

After a five-second warm-up, NBomber sends one request per second for the first five seconds. It then sends two requests per second for the next ten seconds and, lastly, three per second for the next 15 seconds. This keeps the local dev machine from being overloaded with too many requests. NBomber also uses network sockets, so tread carefully when both the target API and the benchmark tool run on the same machine.
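A quick back-of-the-envelope sketch shows how much load this scenario generates, not counting the warm-up period. The 100 downstream calls per request come from the loop in the first API.

```csharp
using System;
using System.Linq;

// (requests per second, seconds) for each InjectPerSec phase in the scenario.
var phases = new (int Rate, int Seconds)[] { (1, 5), (2, 10), (3, 15) };

var totalRequests = phases.Sum(p => p.Rate * p.Seconds); // 5 + 20 + 45 = 70
var totalSeconds = phases.Sum(p => p.Seconds);           // 30, not counting warm-up

// Each request to the first API makes 100 calls to the second API.
var downstreamCalls = totalRequests * 100;               // 7000

Console.WriteLine($"{totalRequests} requests over {totalSeconds}s -> {downstreamCalls} calls to the second API");
```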

The test step checks the status code and reports any non-200 response as a failure, which keeps track of API errors. In .NET, when the Kestrel server gets too many requests, it can reject them with a failure response.

Now, inspect the results and check for latencies, concurrent requests, and throughput.

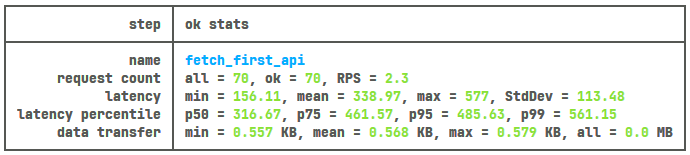

The P95 latency is 1.5 seconds, meaning 95% of requests completed in 1.5 seconds or less; this is what most customers will experience. Throughput remains low, because the tool was calibrated to go up to only three requests per second. On a local dev machine, it’s hard to gauge concurrency, because the same resources that run the benchmark tool are also needed to serve requests.
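If the P95 term is unfamiliar, a nearest-rank percentile is easy to compute. This is a minimal sketch of one common definition, not NBomber’s own calculation, which may differ in the details; the latency values are made up.

```csharp
using System;
using System.Linq;

// Nearest-rank percentile: the smallest sample such that at least
// `percentile` percent of all samples are less than or equal to it.
static double Percentile(double[] samples, int percentile)
{
    if (samples.Length == 0) throw new ArgumentException("No samples.", nameof(samples));
    var sorted = samples.OrderBy(x => x).ToArray();
    // rank = ceil(percentile * n / 100), computed with integer math to avoid rounding surprises
    var rank = (percentile * sorted.Length + 99) / 100;
    return sorted[Math.Max(rank, 1) - 1];
}

// Made-up latencies in milliseconds: 1, 2, ..., 100
var latencies = Enumerable.Range(1, 100).Select(x => (double)x).ToArray();
Console.WriteLine(Percentile(latencies, 95)); // 95
```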

dotTrace Analysis

Next, pick a profiler like dotTrace, which shows where the code spends its time. This will help further isolate where the performance issue might be.

To do an analysis, profile the first API with dotTrace while NBomber hits it as hard as possible, then take a snapshot. The goal is to simulate a heavy load to identify where the slowness is coming from. The benchmark already in place is good enough for this, so make sure you’re running dotTrace alongside NBomber.

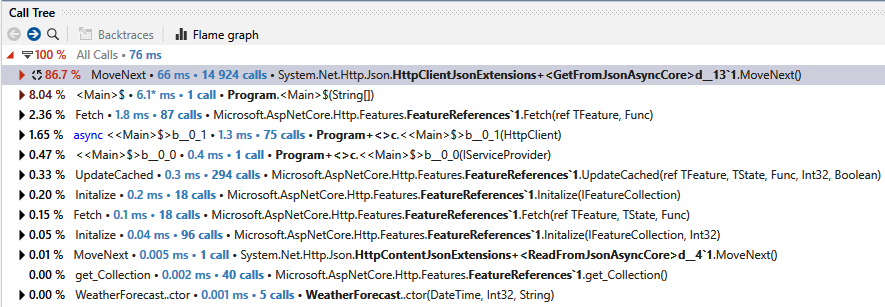

Based on this analysis, roughly 85% of the time is spent on the GetFromJsonAsync call. Poking around in the tool reveals this is coming from the HTTP client. This correlates with the performance theory, because this shows the async looping with O(n^2) complexity could be the problem.

A benchmark tool running locally will help identify bottlenecks. The next step is to use a monitoring tool that can track requests in a live production environment.

Performance investigations are all about gathering information and cross-checking that every tool tells a cohesive story.

Site24x7 Monitoring

A tool like Site24x7 can aid in tackling performance issues.

For this application, you want to focus on the P95 latencies in the two APIs. Watching both matters because of the ripple effect: the APIs are part of a series of interconnected services in a distributed architecture, so when one API starts having performance issues, the services that depend on it can also experience problems.

Scalability is another crucial factor. As the user base grows, the app can start to lag, so it helps to track normal behavior and predict how the app scales over time. The nested async loop in this app might work well for a small number of users, but it may not scale, because that number is unbounded.

Lastly, as you deploy optimizations and improvements, it’s key to track versions. With each release, you need to know whether that version made performance better or worse.

A proper monitoring tool is necessary, because issues aren’t always easy to spot in a local dev environment. The assumptions made locally may not hold in production, because your customers can have a different opinion. Start your 30-day free trial of Site24x7.

A More Performant Solution

With the arsenal of tools so far, it’s time to explore a better approach.

CURL showed that the first API is the one having performance issues. This means any improvements made to the second API are negligible: even though there’s a ripple effect here, shaving off a few milliseconds from the second API won’t make much of a difference.

NBomber corroborated this story by showing P95 latencies of around 1.5 seconds in the first API. Then, dotTrace singled out the async loop, because this is where the algorithm spent most of its time. A monitoring tool like Site24x7 would have provided supporting information by showing P95 latencies, scalability over time, and versioning. Likely, latencies would have spiked with the specific version that introduced the nested loop.

According to performance theory, quadratic complexity is a big concern, because doubling the size of both lists quadruples the work. A good technique is to squash the cost of the loop by reducing the number of sequential round trips it has to wait on.

The catch is that every await inside the loop suspends the method until that call completes, so the loop sends only one request at a time. Each iteration waits for the second API to return a response before the next one starts. This is sad news for performance.
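The effect is easy to reproduce without any web servers. In this sketch, FakeCallAsync is a hypothetical stand-in for GetFromJsonAsync that uses Task.Delay as simulated latency and records how many calls overlap. With the await inside the loop, no two calls ever overlap.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

var gate = new object();
var inFlight = 0;
var maxInFlight = 0;

// Hypothetical stand-in for GetFromJsonAsync: simulated latency plus overlap tracking.
async Task<int> FakeCallAsync(int id)
{
    lock (gate) { inFlight++; maxInFlight = Math.Max(maxInFlight, inFlight); }
    await Task.Delay(50); // simulated network + IO time
    lock (gate) { inFlight--; }
    return id;
}

var results = new List<int>();
for (var i = 0; i < 10; i++)
{
    // The await suspends the loop until this call finishes,
    // so the next call isn't sent until the previous one returns.
    results.Add(await FakeCallAsync(i));
}

Console.WriteLine($"{results.Count} calls, at most {maxInFlight} in flight"); // 10 calls, at most 1 in flight
```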

One naive approach is to simply crush the loop by sending all HTTP requests at the same time:

```csharp
app.MapGet("/", async (HttpClient client) =>
    (await Task.WhenAll(
        // Start all 100 calls up front; WhenAll completes when every call has finished.
        Enumerable
            .Range(0, 100)
            .Select(_ =>
                client.GetFromJsonAsync<List<WeatherForecast>>(
                    "/weatherForecast")
            )
        )
    )
    .ToArray()[Random.Shared.Next(0, 100)]);
```

This removes the await from inside the loop, so the endpoint awaits only once. Task.WhenAll lets all the requests run concurrently and completes when every one of them has finished, which smashes the loop.
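Here is the same idea in a self-contained sketch. FakeCallAsync is a hypothetical stand-in for GetFromJsonAsync that uses Task.Delay as simulated latency and records how many calls overlap. Because every call starts before any of them is awaited, all ten are in flight at once, so in this simulation the total time is close to a single call’s latency rather than the sum of all of them.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

var gate = new object();
var inFlight = 0;
var maxInFlight = 0;

// Hypothetical stand-in for GetFromJsonAsync: simulated latency plus overlap tracking.
async Task<int> FakeCallAsync(int id)
{
    lock (gate) { inFlight++; maxInFlight = Math.Max(maxInFlight, inFlight); }
    await Task.Delay(50); // simulated network + IO time
    lock (gate) { inFlight--; }
    return id;
}

// Select only describes the calls; Task.WhenAll enumerates the sequence,
// which starts every call before any of them has finished.
var results = await Task.WhenAll(
    Enumerable.Range(0, 10).Select(i => FakeCallAsync(i)));

Console.WriteLine($"{results.Length} calls, at most {maxInFlight} in flight at once");
```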

This approach might work, but it runs the risk of flooding the second API with too many requests at once. The web server may start rejecting requests, for example if it treats the burst as a DoS attack. A far more sustainable approach is to cap how many requests run at the same time:

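```csharp
// Shared by every request to this endpoint: at most 10 calls to the second API at once.
var sem = new SemaphoreSlim(10);

app.MapGet("/", async (HttpClient client) =>
    (await Task.WhenAll(
        Enumerable
            .Range(0, 100)
            .Select(async _ =>
            {
                // Wait for a free slot before entering try, so Release only runs after a successful wait.
                await sem.WaitAsync();
                try
                {
                    return await client.GetFromJsonAsync<List<WeatherForecast>>(
                        "/weatherForecast");
                }
                finally
                {
                    // Free the slot even if the call throws.
                    sem.Release();
                }
            })
        )
    )
    .ToArray()[Random.Shared.Next(0, 100)]);
```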

This works much like a bouncer at a club. The maximum capacity is ten, so only ten requests can be inside the pool at any one time. Requests still run concurrently: as soon as one request exits the pool, another can enter immediately, without waiting for the whole group of ten to finish.
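Here is the bouncer idea in a self-contained sketch, with a hypothetical FakeCallAsync stand-in for the HTTP call (Task.Delay as simulated latency) and a capacity of three instead of ten so the effect is easy to see. WaitAsync is awaited before the try block, so Release only runs for a slot that was actually acquired.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

var gate = new object();
var inFlight = 0;
var maxInFlight = 0;

// Hypothetical stand-in for GetFromJsonAsync: simulated latency plus overlap tracking.
async Task<int> FakeCallAsync(int id)
{
    lock (gate) { inFlight++; maxInFlight = Math.Max(maxInFlight, inFlight); }
    await Task.Delay(50); // simulated network + IO time
    lock (gate) { inFlight--; }
    return id;
}

var throttle = new SemaphoreSlim(3); // the bouncer: at most three calls inside at once

async Task<int> ThrottledCallAsync(int id)
{
    await throttle.WaitAsync();   // wait at the door for a free slot
    try
    {
        return await FakeCallAsync(id);
    }
    finally
    {
        throttle.Release();       // leave the club, letting the next call in
    }
}

var results = await Task.WhenAll(
    Enumerable.Range(0, 10).Select(i => ThrottledCallAsync(i)));

Console.WriteLine($"{results.Length} calls, at most {maxInFlight} in flight at once");
```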

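This doesn’t reduce the total number of calls, but with up to ten calls in flight at once, the loop waits on roughly a tenth as many sequential round trips, which relieves pressure from all the crazy looping.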

With this code in place, run NBomber and check the results.

The P95 latencies are now a third of what they used to be. A half-second response is far more reasonable than anything that takes over a second. Of course, you can keep going and optimize this further, but I think your customers will be quite pleased with this.

Conclusion

Performance optimizations are a never-ending story. As the business grows, the assumptions once made in the code can become invalid over time. Therefore, you need tools to analyze, draw benchmarks, and continuously monitor the app to help quell performance woes.
